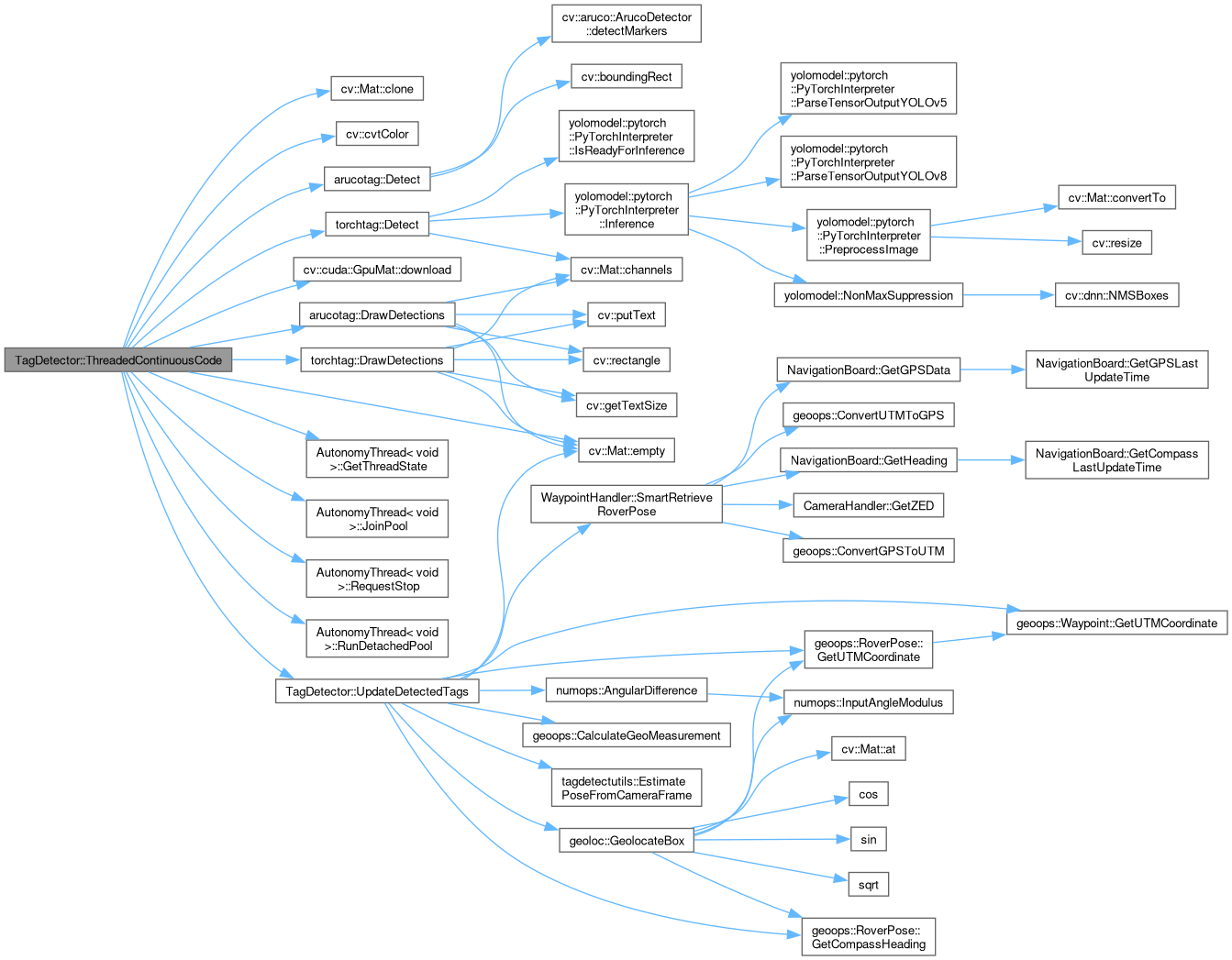

This code runs continuously in a separate thread. On each iteration it grabs a new frame from the given camera, detects the tags in the image, then filters and stores them. Finally, it fulfills any pending requests for the detected tags or for frames with the detections drawn on them.

```cpp
{
    // Check whether the camera is open; the check depends on the camera type.
    if (m_bUsingZedCamera)
    {
        // ZED camera: query its open state through the ZEDCamera interface.
        if (!std::dynamic_pointer_cast<ZEDCamera>(m_pCamera)->GetCameraIsOpen())
        {
            m_bCameraIsOpened = false;

            // ...
            {
                // ...
                LOG_CRITICAL(logging::g_qSharedLogger,
                             "TagDetector start was attempted for ZED camera with serial number {}, but camera never properly opened or it has been closed/rebooted! "
                             "This tag detector will now stop.",
                             std::dynamic_pointer_cast<ZEDCamera>(m_pCamera)->GetCameraSerial());
            }
        }
        else
        {
            m_bCameraIsOpened = true;
        }
    }
    else
    {
        // Basic camera: query its open state through the BasicCamera interface.
        if (!std::dynamic_pointer_cast<BasicCamera>(m_pCamera)->GetCameraIsOpen())
        {
            m_bCameraIsOpened = false;

            // ...
            {
                // ...
                LOG_CRITICAL(logging::g_qSharedLogger,
                             "TagDetector start was attempted for BasicCam at {}, but camera never properly opened or it has become disconnected!",
                             std::dynamic_pointer_cast<BasicCamera>(m_pCamera)->GetCameraLocation());
            }
        }
        else
        {
            m_bCameraIsOpened = true;
        }
    }

    // Only grab and process frames while the camera is open.
    if (m_bCameraIsOpened)
    {
        // Futures that report whether each asynchronous copy request succeeded.
        std::future<bool> fuPointCloudCopyStatus;
        std::future<bool> fuRegularFrameCopyStatus;

        if (m_bUsingZedCamera)
        {
            if (m_bUsingGpuMats)
            {
                // Request copies of the point cloud and the regular frame into GPU mats.
                fuPointCloudCopyStatus   = std::dynamic_pointer_cast<ZEDCamera>(m_pCamera)->RequestPointCloudCopy(m_cvGPUPointCloud);
                fuRegularFrameCopyStatus = std::dynamic_pointer_cast<ZEDCamera>(m_pCamera)->RequestFrameCopy(m_cvGPUFrame);

                // If both copy requests report success, download the point cloud to CPU memory.
                if (fuPointCloudCopyStatus.get() && fuRegularFrameCopyStatus.get())
                {
                    m_cvGPUPointCloud.download(m_cvPointCloud);
                    // ...
                }
                else
                {
                    LOG_WARNING(logging::g_qSharedLogger, "TagDetector unable to get point cloud from ZEDCam!");
                }
            }
            else
            {
                // Request copies of the point cloud and the regular frame into CPU mats.
                fuPointCloudCopyStatus   = std::dynamic_pointer_cast<ZEDCamera>(m_pCamera)->RequestPointCloudCopy(m_cvPointCloud);
                fuRegularFrameCopyStatus = std::dynamic_pointer_cast<ZEDCamera>(m_pCamera)->RequestFrameCopy(m_cvFrame);

                // Wait for each copy and warn about any that failed.
                if (!fuPointCloudCopyStatus.get())
                {
                    LOG_WARNING(logging::g_qSharedLogger, "TagDetector unable to get point cloud from ZEDCam!");
                }
                if (!fuRegularFrameCopyStatus.get())
                {
                    LOG_WARNING(logging::g_qSharedLogger, "TagDetector unable to get regular frame from ZEDCam!");
                }
            }
        }
        else
        {
            // Basic cameras provide only an RGB frame.
            fuRegularFrameCopyStatus = std::dynamic_pointer_cast<BasicCamera>(m_pCamera)->RequestFrameCopy(m_cvFrame);

            if (!fuRegularFrameCopyStatus.get())
            {
                LOG_WARNING(logging::g_qSharedLogger, "TagDetector unable to get RGB image from BasicCam!");
            }
        }

        // ...

        // Stop processing if no frame was received.
        if (m_cvFrame.empty())
        {
            LOG_WARNING(logging::g_qSharedLogger, "Frame from camera is empty!");
            return;
        }

        // Clear the detections from the previous iteration.
        m_vNewlyDetectedTags.clear();

        // Detect tags with the OpenCV ArUco detector on a copy of the frame.
        m_cvArucoProcFrame = m_cvFrame.clone();
        std::vector<tagdetectutils::ArucoTag> vNewOpenCVTags = arucotag::Detect(m_cvArucoProcFrame, m_cvArucoDetector);
        m_vNewlyDetectedTags.insert(m_vNewlyDetectedTags.end(), vNewOpenCVTags.begin(), vNewOpenCVTags.end());

        // Optionally add detections from the PyTorch (YOLO) model.
        if (m_bTorchEnabled)
        {
            std::vector<tagdetectutils::ArucoTag> vNewTorchTags =
                torchtag::Detect(m_cvArucoProcFrame, *m_pTorchDetector, m_fTorchMinObjectConfidence, m_fTorchNMSThreshold);
            m_vNewlyDetectedTags.insert(m_vNewlyDetectedTags.end(), vNewTorchTags.begin(), vNewTorchTags.end());
        }

        // ...
        {
            // Record the camera's horizontal field of view on each tag.
            stTag.dHorizontalFOV = m_pCamera->GetPropHorizontalFOV();
        }

        // ...
    }

    // Fulfill pending requests for overlay frames and detected tags.
    std::shared_lock<std::shared_mutex> lkSchedulers(m_muPoolScheduleMutex);
    if (!m_qDetectedTagDrawnOverlayFramesCopySchedule.empty() || !m_qDetectedArucoTagCopySchedule.empty())
    {
        // Queue one pool task per pending request, using m_nNumDetectedTagsRetrievalThreads threads.
        size_t siQueueLength = m_qDetectedTagDrawnOverlayFramesCopySchedule.size() + m_qDetectedArucoTagCopySchedule.size();
        this->RunDetachedPool(siQueueLength, m_nNumDetectedTagsRetrievalThreads);

        // ...

        lkSchedulers.unlock();
    }
}
```
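The open-state check in the listing dereferences the result of `std::dynamic_pointer_cast` directly and relies on `m_bUsingZedCamera` matching the camera's real type. `std::dynamic_pointer_cast` returns an empty `shared_ptr` when the object is not of the requested type, so a defensive variant can cast once and test the result. The sketch below assumes a hypothetical three-class hierarchy (`Camera`, `StereoCamera`, `UsbCamera`) standing in for the project's base camera type, `ZEDCamera`, and `BasicCamera`; it is not the project's API.

```cpp
#include <cassert>
#include <memory>

// Hypothetical camera hierarchy standing in for the base camera type,
// ZEDCamera and BasicCamera. The base must be polymorphic for dynamic casts.
struct Camera
{
    virtual ~Camera() = default;
};

struct StereoCamera : Camera
{
    bool bOpen = true;
    bool GetCameraIsOpen() const { return bOpen; }
};

struct UsbCamera : Camera
{
    bool bOpen = false;
    bool GetCameraIsOpen() const { return bOpen; }
};

// Cast once and test the result: std::dynamic_pointer_cast returns an empty
// shared_ptr if pCamera is null or does not point to the requested type.
bool CameraIsOpen(const std::shared_ptr<Camera>& pCamera)
{
    if (std::shared_ptr<StereoCamera> pStereo = std::dynamic_pointer_cast<StereoCamera>(pCamera))
    {
        return pStereo->GetCameraIsOpen();
    }
    if (std::shared_ptr<UsbCamera> pUsb = std::dynamic_pointer_cast<UsbCamera>(pCamera))
    {
        return pUsb->GetCameraIsOpen();
    }
    // Unknown type or null pointer: report the camera as not open.
    return false;
}
```

Inferring the type from the cast removes the need for a separate flag, at the cost of a runtime type check on each call.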
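Each `Request...Copy` call in the listing returns a `std::future<bool>` that is fulfilled once the camera side has copied data into the supplied buffer. The minimal sketch below models that handshake with `std::promise`, using a hypothetical `FrameCopyQueue` class and a `std::vector<unsigned char>` in place of `cv::Mat`; neither is the camera classes' actual implementation. It also illustrates one detail of the GPU branch: in `a.get() && b.get()`, the `&&` operator short-circuits, so `b.get()` is never called when `a.get()` returns `false`. The hypothetical helper `BothCopiesSucceeded` reads both results before combining them.

```cpp
#include <cassert>
#include <future>
#include <mutex>
#include <queue>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical stand-in for an image buffer such as cv::Mat.
using Frame = std::vector<unsigned char>;

// Hypothetical camera-side request queue; not the ZEDCamera/BasicCamera implementation.
class FrameCopyQueue
{
    public:
        // Consumer side: register a destination buffer and receive a future for the result.
        std::future<bool> RequestFrameCopy(Frame& frDestination)
        {
            std::lock_guard<std::mutex> lkQueue(m_muQueue);
            m_qRequests.emplace(&frDestination, std::promise<bool>());
            return m_qRequests.back().second.get_future();
        }

        // Camera side: after a grab, copy the frame into every pending destination.
        void FulfillRequests(const Frame& frLatest, bool bGrabSucceeded)
        {
            std::lock_guard<std::mutex> lkQueue(m_muQueue);
            while (!m_qRequests.empty())
            {
                std::pair<Frame*, std::promise<bool>>& stRequest = m_qRequests.front();
                if (bGrabSucceeded)
                {
                    // The copy is made before set_value(), so it is visible once get() returns.
                    *stRequest.first = frLatest;
                }
                stRequest.second.set_value(bGrabSucceeded);
                m_qRequests.pop();
            }
        }

    private:
        std::mutex m_muQueue;
        std::queue<std::pair<Frame*, std::promise<bool>>> m_qRequests;
};

// Consumer side: call get() on BOTH futures before combining the results.
// Writing `fuA.get() && fuB.get()` would skip fuB.get() whenever fuA reports false.
bool BothCopiesSucceeded(std::future<bool>& fuA, std::future<bool>& fuB)
{
    const bool bA = fuA.get();
    const bool bB = fuB.get();
    return bA && bB;
}
```

Whether a skipped wait matters in the real loop depends on how the camera classes handle requests whose future is abandoned, which this listing does not show.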
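The last block of the listing takes a shared lock on `m_muPoolScheduleMutex`, queues one pool task per pending request, and then releases the lock. The sketch below shows the same idea with plain `std::thread` workers and rests on assumptions: the `TagRequestServer` class, its `std::promise` request queue, and the second mutex `m_muPop` are hypothetical and are not `AutonomyThread`'s pool. Requesters take an exclusive lock to enqueue, so holding the shared lock while serving keeps new requests out until the workers have drained the queue; because the workers pop concurrently, they serialize on their own mutex.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <future>
#include <mutex>
#include <queue>
#include <shared_mutex>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical tag record standing in for tagdetectutils::ArucoTag.
struct Tag
{
    int nID;
};

// Hypothetical request server; not AutonomyThread's pool or TagDetector's API.
class TagRequestServer
{
    public:
        // Requester side: enqueue a request and get a future for the detected tags.
        std::future<std::vector<Tag>> RequestTags()
        {
            // Exclusive lock: enqueuing modifies the schedule.
            std::unique_lock<std::shared_mutex> lkSchedule(m_muSchedule);
            m_qRequests.emplace();
            return m_qRequests.back().get_future();
        }

        // Detector side: hand a copy of vTags to every pending request.
        void ServeRequests(const std::vector<Tag>& vTags, unsigned int nThreads = 2)
        {
            // Shared lock: blocks RequestTags() until the queue has been drained.
            std::shared_lock<std::shared_mutex> lkSchedule(m_muSchedule);
            if (m_qRequests.empty())
            {
                return;
            }

            // Use at least one worker and never more workers than pending requests.
            const std::size_t siWorkers = std::min<std::size_t>(std::max(nThreads, 1u), m_qRequests.size());
            std::vector<std::thread> vWorkers;
            for (std::size_t siIndex = 0; siIndex < siWorkers; ++siIndex)
            {
                vWorkers.emplace_back(
                    [this, &vTags]
                    {
                        while (true)
                        {
                            std::promise<std::vector<Tag>> prRequest;
                            {
                                // Workers pop concurrently, so they serialize on their own mutex.
                                std::lock_guard<std::mutex> lkPop(m_muPop);
                                if (m_qRequests.empty())
                                {
                                    return;
                                }
                                prRequest = std::move(m_qRequests.front());
                                m_qRequests.pop();
                            }
                            prRequest.set_value(vTags);
                        }
                    });
            }

            // Wait for all workers before the shared lock is released.
            for (std::thread& thWorker : vWorkers)
            {
                thWorker.join();
            }
        }

    private:
        std::shared_mutex m_muSchedule;
        std::mutex m_muPop;
        std::queue<std::promise<std::vector<Tag>>> m_qRequests;
};
```

Joining the workers before returning keeps the captured reference to `vTags` valid for as long as any worker can read it.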

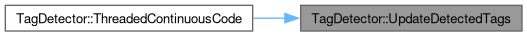

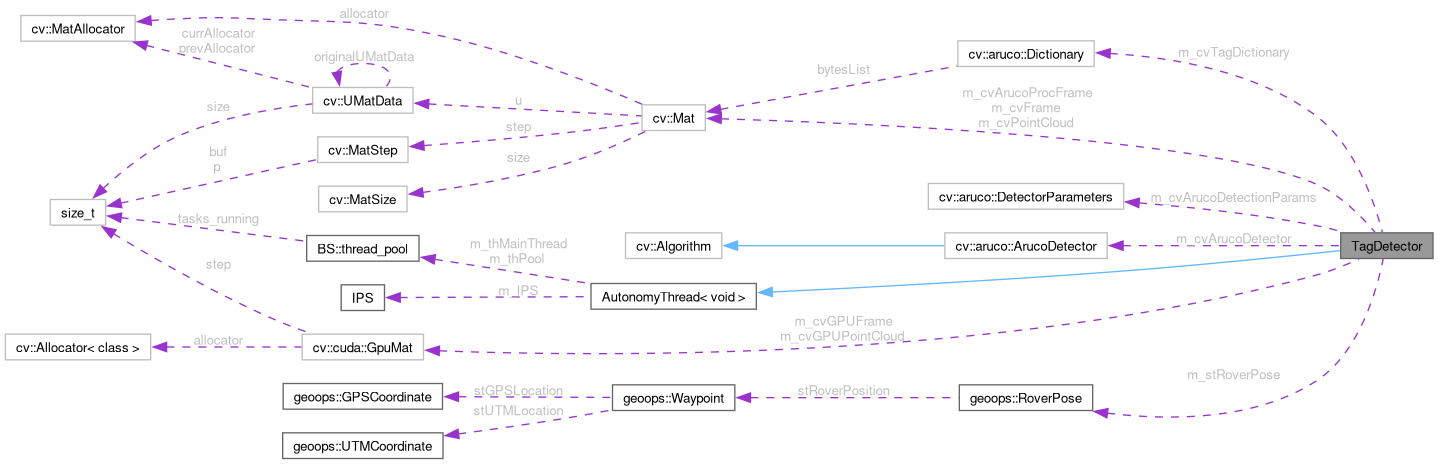

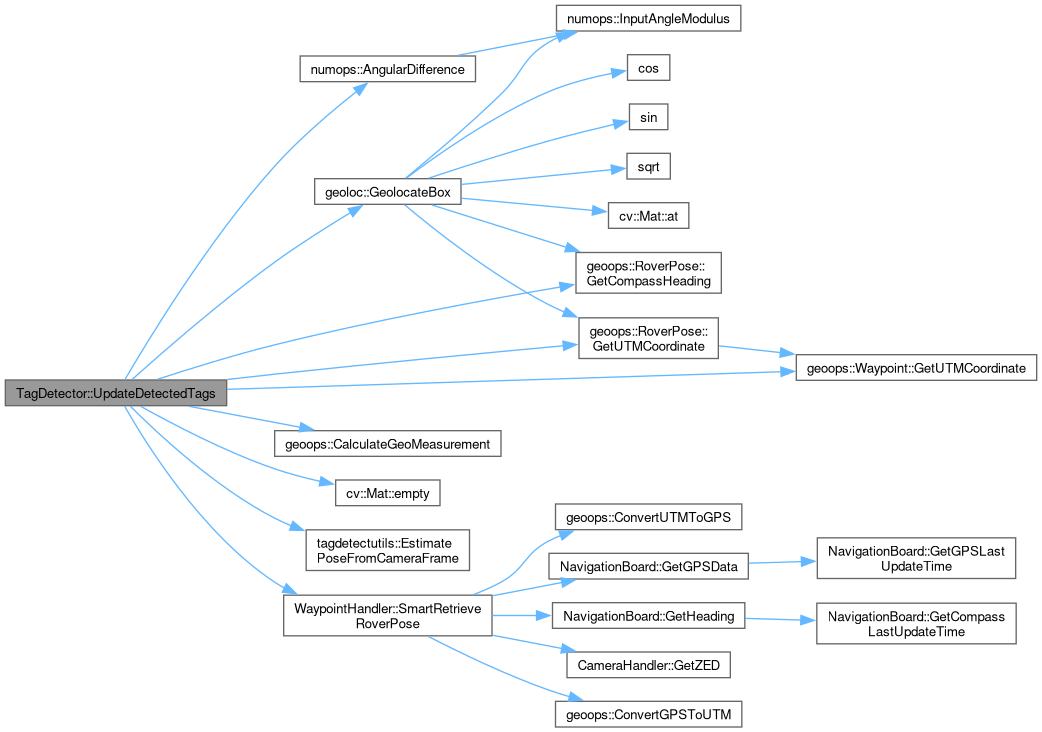

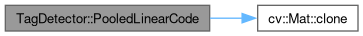

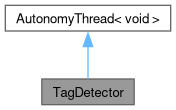
