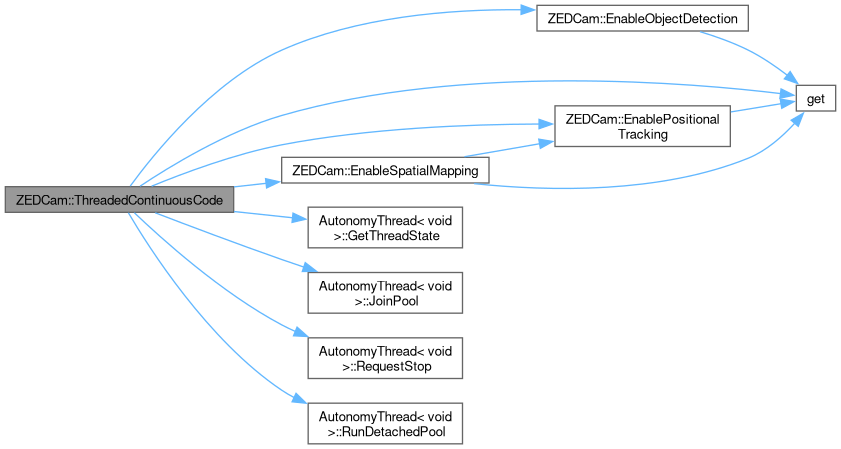

The code inside this private method runs in a separate thread, but still has access to `this`. The method continuously calls the grab() function of the ZED SDK, which updates all frames (RGB, depth, point cloud) and all other data, such as positional tracking and spatial mapping. A thread pool is then started and joined once per iteration to copy the frames and/or measures to any other threads waiting in the queues.
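The locking hand-off described above (check the camera state under a shared lock, release it, then take an exclusive lock to update the camera) can be sketched in isolation. This is a minimal illustration under stated assumptions, not the class's actual code: `FakeCamera`, `StepResult` and `GrabLoopSketch` are hypothetical names invented for the example, and the real `sl::Camera` calls are replaced by trivial stand-ins.

```cpp
#include <cassert>
#include <mutex>
#include <shared_mutex>

// Hypothetical stand-in for the camera object; it only mimics an open/grab life cycle.
struct FakeCamera
{
    bool bOpened   = false;
    int nGrabCount = 0;

    bool isOpened() const { return bOpened; }
    bool open() { bOpened = true; return true; }
    bool grab() { ++nGrabCount; return true; }
};

// Hypothetical result type for one loop iteration.
enum class StepResult { Reopened, Grabbed, Failed };

class GrabLoopSketch
{
    public:
        // One iteration of the loop: read the state under a shared lock,
        // then mutate the camera under an exclusive lock.
        StepResult RunOnce()
        {
            std::shared_lock<std::shared_mutex> lkRead(m_muCameraMutex);
            const bool bOpened = m_camera.isOpened();
            // The shared lock must be released before the same thread requests
            // exclusive ownership of the same mutex, otherwise it would deadlock.
            lkRead.unlock();

            std::unique_lock<std::shared_mutex> lkWrite(m_muCameraMutex);
            assert(lkWrite.owns_lock());
            if (!bOpened)
            {
                return m_camera.open() ? StepResult::Reopened : StepResult::Failed;
            }
            return m_camera.grab() ? StepResult::Grabbed : StepResult::Failed;
        }

        // Readers only need shared ownership.
        int GrabCount()
        {
            std::shared_lock<std::shared_mutex> lkRead(m_muCameraMutex);
            return m_camera.nGrabCount;
        }

    private:
        std::shared_mutex m_muCameraMutex;
        FakeCamera m_camera;
};
```

Calling `RunOnce()` on a fresh `GrabLoopSketch` first reopens the fake camera and then grabs on every later call. Note that the state read under the shared lock may be stale by the time the exclusive lock is acquired; the sketch does not re-check it.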

290{

291

292 std::shared_lock<std::shared_mutex> lkReadCameraLock(m_muCameraMutex);

293

294 if (!m_slCamera.isOpened())

295 {

296

297 lkReadCameraLock.unlock();

298

299

301 {

302

304

305 LOG_CRITICAL(logging::g_qSharedLogger,

306 "Camera start was attempted for ZED camera with serial number {}, but camera never properly opened or it has been closed/rebooted!",

307 m_unCameraSerialNumber);

308 }

309 else

310 {

311 std::chrono::time_point tmCurrentTime = std::chrono::system_clock::now();

312

313 int nTimeSinceEpoch = std::chrono::duration_cast<std::chrono::seconds>(tmCurrentTime.time_since_epoch()).count();

314

315

316 if (nTimeSinceEpoch % 5 == 0 && !m_bCameraReopenAlreadyChecked)

317 {

318

319 std::unique_lock<std::shared_mutex> lkWriteCameraLock(m_muCameraMutex);

320

321 sl::ERROR_CODE slReturnCode = m_slCamera.open(m_slCameraParams);

322

323 lkWriteCameraLock.unlock();

324

325

326 if (slReturnCode == sl::ERROR_CODE::SUCCESS)

327 {

328

329 LOG_INFO(logging::g_qSharedLogger, "ZED stereo camera with serial number {} has been reconnected and reopened!", m_unCameraSerialNumber);

330

331

332 if (m_bCameraIsFusionMaster)

333 {

334

335 std::unique_lock<std::shared_mutex> lkWriteCameraLock(m_muCameraMutex);

336

337 m_slCamera.startPublishing();

338

339 lkWriteCameraLock.unlock();

340 }

341

342

343 if (!m_slCamera.isPositionalTrackingEnabled())

344 {

346 }

347 else

348 {

349

350 LOG_ERROR(logging::g_qSharedLogger,

351 "After reopening ZED stereo camera with serial number {}, positional tracking failed to reinitialize. sl::ERROR_CODE is: {}",

352 m_unCameraSerialNumber,

353 sl::toString(slReturnCode).

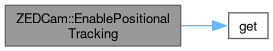

get());

354 }

355

356 if (m_slCamera.getSpatialMappingState() != sl::SPATIAL_MAPPING_STATE::OK)

357 {

359 }

360 else

361 {

362

363 LOG_ERROR(logging::g_qSharedLogger,

364 "After reopening ZED stereo camera with serial number {}, spatial mapping failed to reinitialize. sl::ERROR_CODE is: {}",

365 m_unCameraSerialNumber,

366 sl::toString(slReturnCode).

get());

367 }

368

369 if (!m_slCamera.isObjectDetectionEnabled())

370 {

372 }

373 else

374 {

375

376 LOG_ERROR(logging::g_qSharedLogger,

377 "After reopening ZED stereo camera with serial number {}, object detection failed to reinitialize. sl::ERROR_CODE is: {}",

378 m_unCameraSerialNumber,

379 sl::toString(slReturnCode).

get());

380 }

381 }

382 else

383 {

384

385 LOG_WARNING(logging::g_qSharedLogger,

386 "Attempt to reopen ZED stereo camera with serial number {} has failed! Trying again in 5 seconds...",

387 m_unCameraSerialNumber);

388 }

389

390

391 m_bCameraReopenAlreadyChecked = true;

392 }

393 else if (nTimeSinceEpoch % 5 != 0)

394 {

395

396 m_bCameraReopenAlreadyChecked = false;

397 }

398 }

399 }

400 else

401 {

402

403 lkReadCameraLock.unlock();

404

405 std::unique_lock<std::shared_mutex> lkWriteCameraLock(m_muCameraMutex);

406

407 sl::ERROR_CODE slReturnCode = m_slCamera.grab(m_slRuntimeParams);

408

409 lkWriteCameraLock.unlock();

410

411

412 if (m_bCameraIsFusionMaster)

413 {

414

415 std::unique_lock<std::shared_mutex> lkFusionLock(m_muFusionMutex);

416

417 sl::FUSION_ERROR_CODE slReturnCode = m_slFusionInstance.process();

418

419 lkFusionLock.unlock();

420

421

422 if (slReturnCode != sl::FUSION_ERROR_CODE::SUCCESS && slReturnCode != sl::FUSION_ERROR_CODE::NO_NEW_DATA_AVAILABLE)

423 {

424

425 LOG_WARNING(logging::g_qSharedLogger,

426 "Unable to process fusion data for camera {} ({})! sl::FUSION_ERROR_CODE is: {}",

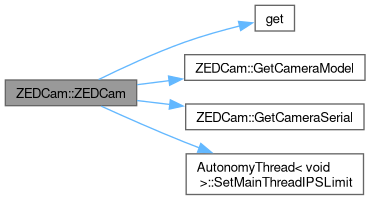

427 sl::toString(m_slCameraModel).

get(),

428 m_unCameraSerialNumber,

429 sl::toString(slReturnCode).

get());

430 }

431 }

432

433

434 if (slReturnCode == sl::ERROR_CODE::SUCCESS)

435 {

436

437 if (m_bNormalFramesQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

438 {

439

440 slReturnCode = m_slCamera.retrieveImage(m_slFrame, constants::ZED_RETRIEVE_VIEW, m_slMemoryType, sl::Resolution(m_nPropResolutionX, m_nPropResolutionY));

441

442 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

443 {

444

445 LOG_WARNING(logging::g_qSharedLogger,

446 "Unable to retrieve new frame image for stereo camera {} ({})! sl::ERROR_CODE is: {}",

447 sl::toString(m_slCameraModel).

get(),

448 m_unCameraSerialNumber,

449 sl::toString(slReturnCode).

get());

450 }

451 }

452

453

454 if (m_bDepthFramesQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

455 {

456

457 slReturnCode = m_slCamera.retrieveMeasure(m_slDepthMeasure, m_slDepthMeasureType, m_slMemoryType, sl::Resolution(m_nPropResolutionX, m_nPropResolutionY));

458

459 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

460 {

461

462 LOG_WARNING(logging::g_qSharedLogger,

463 "Unable to retrieve new depth measure for stereo camera {} ({})! sl::ERROR_CODE is: {}",

464 sl::toString(m_slCameraModel).

get(),

465 m_unCameraSerialNumber,

466 sl::toString(slReturnCode).

get());

467 }

468

469

470 slReturnCode = m_slCamera.retrieveImage(m_slDepthImage, sl::VIEW::DEPTH, m_slMemoryType, sl::Resolution(m_nPropResolutionX, m_nPropResolutionY));

471

472 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

473 {

474

475 LOG_WARNING(logging::g_qSharedLogger,

476 "Unable to retrieve new depth image for stereo camera {} ({})! sl::ERROR_CODE is: {}",

477 sl::toString(m_slCameraModel).

get(),

478 m_unCameraSerialNumber,

479 sl::toString(slReturnCode).

get());

480 }

481 }

482

483

484 if (m_bPointCloudsQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

485 {

486

487 slReturnCode = m_slCamera.retrieveMeasure(m_slPointCloud, sl::MEASURE::XYZBGRA, m_slMemoryType, sl::Resolution(m_nPropResolutionX, m_nPropResolutionY));

488

489 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

490 {

491

492 LOG_WARNING(logging::g_qSharedLogger,

493 "Unable to retrieve new point cloud for stereo camera {} ({})! sl::ERROR_CODE is: {}",

494 sl::toString(m_slCameraModel).

get(),

495 m_unCameraSerialNumber,

496 sl::toString(slReturnCode).

get());

497 }

498 }

499

500

501 if (m_slCamera.isPositionalTrackingEnabled())

502 {

503

504 if (m_bPosesQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

505 {

506

507 sl::POSITIONAL_TRACKING_STATE slPoseTrackReturnCode;

508

509

510 if (m_bCameraIsFusionMaster)

511 {

512

513 slPoseTrackReturnCode = m_slFusionInstance.getPosition(m_slCameraPose, sl::REFERENCE_FRAME::WORLD);

514 }

515 else

516 {

517

518 slPoseTrackReturnCode = m_slCamera.getPosition(m_slCameraPose, sl::REFERENCE_FRAME::WORLD);

519 }

520

521 if (slPoseTrackReturnCode != sl::POSITIONAL_TRACKING_STATE::OK)

522 {

523

524 LOG_WARNING(logging::g_qSharedLogger,

525 "Unable to retrieve new positional tracking pose for stereo camera {} ({})! sl::POSITIONAL_TRACKING_STATE is: {}",

526 sl::toString(m_slCameraModel).

get(),

527 m_unCameraSerialNumber,

528 sl::toString(slPoseTrackReturnCode).

get());

529 }

530 }

531

532

533 if (m_bCameraIsFusionMaster && m_bGeoPosesQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

534 {

535

536 sl::GNSS_FUSION_STATUS slGeoPoseTrackReturnCode = m_slFusionInstance.getGeoPose(m_slFusionGeoPose);

537

538 if (slGeoPoseTrackReturnCode != sl::GNSS_FUSION_STATUS::OK && slGeoPoseTrackReturnCode != sl::GNSS_FUSION_STATUS::CALIBRATION_IN_PROGRESS)

539 {

540

541 LOG_WARNING(logging::g_qSharedLogger,

542 "Geo pose tracking state for stereo camera {} ({}) is suboptimal! sl::GNSS_FUSION_STATUS is: {}",

543 sl::toString(m_slCameraModel).

get(),

544 m_unCameraSerialNumber,

545 sl::toString(slGeoPoseTrackReturnCode).

get());

546 }

547 }

548

549

550 if (m_bFloorsQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

551 {

552

553 slReturnCode = m_slCamera.findFloorPlane(m_slFloorPlane,

554 m_slFloorTrackingTransform,

555 m_slCameraPose.getTranslation().y,

556 m_slCameraPose.getRotationMatrix(),

557 m_fExpectedCameraHeightFromFloorTolerance);

558

559 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

560 {

561

562 LOG_WARNING(logging::g_qSharedLogger,

563 "Unable to retrieve new floor plane for stereo camera {} ({})! sl::ERROR_CODE is: {}",

564 sl::toString(m_slCameraModel).

get(),

565 m_unCameraSerialNumber,

566 sl::toString(slReturnCode).

get());

567 }

568 }

569 }

570

571

572 if (m_bSensorsQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

573 {

574

575 slReturnCode = m_slCamera.getSensorsData(m_slSensorsData, sl::TIME_REFERENCE::CURRENT);

576

577

578 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

579 {

580

581 LOG_WARNING(logging::g_qSharedLogger,

582 "Unable to retrieve sensor data for stereo camera {} ({})! sl::ERROR_CODE is: {}",

583 sl::toString(m_slCameraModel).

get(),

584 m_unCameraSerialNumber,

585 sl::toString(slReturnCode).

get());

586 }

587 }

588

589

590 if (m_slCamera.isObjectDetectionEnabled())

591 {

592

593 if (m_bObjectsQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

594 {

595

596 slReturnCode = m_slCamera.retrieveObjects(m_slDetectedObjects);

597

598 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

599 {

600

601 LOG_WARNING(logging::g_qSharedLogger,

602 "Unable to retrieve new object data for stereo camera {} ({})! sl::ERROR_CODE is: {}",

603 sl::toString(m_slCameraModel).

get(),

604 m_unCameraSerialNumber,

605 sl::toString(slReturnCode).

get());

606 }

607 }

608

609

610 if (m_slObjectDetectionBatchParams.enable)

611 {

612

613 if (m_bBatchedObjectsQueued.load(ATOMIC_MEMORY_ORDER_METHOD))

614 {

615

616 slReturnCode = m_slCamera.getObjectsBatch(m_slDetectedObjectsBatched);

617

618 if (slReturnCode != sl::ERROR_CODE::SUCCESS)

619 {

620

621 LOG_WARNING(logging::g_qSharedLogger,

622 "Unable to retrieve new batched object data for stereo camera {} ({})! sl::ERROR_CODE is: {}",

623 sl::toString(m_slCameraModel).

get(),

624 m_unCameraSerialNumber,

625 sl::toString(slReturnCode).

get());

626 }

627 }

628 }

629 }

630

631 else if (m_bObjectsQueued.load(std::memory_order_relaxed) || m_bBatchedObjectsQueued.load(std::memory_order_relaxed))

632 {

633

634 LOG_WARNING(logging::g_qSharedLogger,

635 "Unable to retrieve new object data for stereo camera {} ({})! Object detection is disabled!",

636 sl::toString(m_slCameraModel).

get(),

637 m_unCameraSerialNumber);

638 }

639 }

640 else

641 {

642

643 LOG_ERROR(logging::g_qSharedLogger,

644 "Unable to update stereo camera {} ({}) frames, measurements, and sensors! sl::ERROR_CODE is: {}. Closing camera...",

645 sl::toString(m_slCameraModel).

get(),

646 m_unCameraSerialNumber,

647 sl::toString(slReturnCode).

get());

648

649

650 m_slCamera.close();

651 }

652 }

653

654

655 std::shared_lock<std::shared_mutex> lkSchedulers(m_muPoolScheduleMutex);

656

657 if (!m_qFrameCopySchedule.empty() || !m_qGPUFrameCopySchedule.empty() || !m_qCustomBoxIngestSchedule.empty() || !m_qPoseCopySchedule.empty() ||

658 !m_qGeoPoseCopySchedule.empty() || !m_qFloorCopySchedule.empty() || !m_qSensorsCopySchedule.empty() || !m_qObjectDataCopySchedule.empty() ||

659 !m_qObjectBatchedDataCopySchedule.empty())

660 {

661

662 size_t siTotalQueueLength = m_qFrameCopySchedule.size() + m_qGPUFrameCopySchedule.size() + m_qCustomBoxIngestSchedule.size() + m_qPoseCopySchedule.size() +

663 m_qGeoPoseCopySchedule.size() + m_qFloorCopySchedule.size() + m_qSensorsCopySchedule.size() + m_qObjectDataCopySchedule.size() +

664 m_qObjectBatchedDataCopySchedule.size();

665

666

667 this->

RunDetachedPool(siTotalQueueLength, m_nNumFrameRetrievalThreads);

668

669

670 std::chrono::_V2::system_clock::duration tmCurrentTime = std::chrono::high_resolution_clock::now().time_since_epoch();

671

672 if (std::chrono::duration_cast<std::chrono::seconds>(tmCurrentTime).count() % 31 == 0 && !m_bQueueTogglesAlreadyReset)

673 {

674

675 m_bNormalFramesQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

676 m_bDepthFramesQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

677 m_bPointCloudsQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

678 m_bPosesQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

679 m_bGeoPosesQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

680 m_bFloorsQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

681 m_bSensorsQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

682 m_bObjectsQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

683 m_bBatchedObjectsQueued.store(false, ATOMIC_MEMORY_ORDER_METHOD);

684

685

686 m_bQueueTogglesAlreadyReset = true;

687 }

688

689 else if (m_bQueueTogglesAlreadyReset)

690 {

691

692 m_bQueueTogglesAlreadyReset = false;

693 }

694

695

697 }

698

699

700 lkSchedulers.unlock();

701}
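The reopen logic in the listing gates an action on the wall clock: it fires when the epoch second is a multiple of 5, and a boolean flag keeps it from firing again within that same second. A minimal sketch of that idea, pulled out into a reusable helper, is shown below. `PeriodicGate` and its methods are hypothetical names introduced only for this illustration; they do not exist in the documented class.

```cpp
#include <cassert>
#include <chrono>

// Hypothetical helper: fires at most once in each second that is a multiple of the period.
class PeriodicGate
{
    public:
        explicit PeriodicGate(long long nPeriodSeconds) : m_nPeriodSeconds(nPeriodSeconds)
        {
            assert(nPeriodSeconds > 0);
        }

        // Pure decision function, which makes the gating logic testable without a real clock.
        bool ShouldFire(long long nSecondsSinceEpoch)
        {
            if (nSecondsSinceEpoch % m_nPeriodSeconds == 0)
            {
                if (!m_bAlreadyFired)
                {
                    m_bAlreadyFired = true;    // Remember that this window was already used.
                    return true;
                }
                return false;
            }

            // Outside the firing second: re-arm the gate for the next window.
            m_bAlreadyFired = false;
            return false;
        }

        // Convenience wrapper that samples the system clock.
        bool ShouldFireNow()
        {
            using namespace std::chrono;
            return ShouldFire(duration_cast<seconds>(system_clock::now().time_since_epoch()).count());
        }

    private:
        long long m_nPeriodSeconds;
        bool m_bAlreadyFired = false;
};
```

A loop that spins many times per second could call `ShouldFireNow()` on every iteration and attempt the expensive operation only when it returns `true`. The sketch stores seconds in a `long long` rather than an `int`, which is a choice made here and not something taken from the listing.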

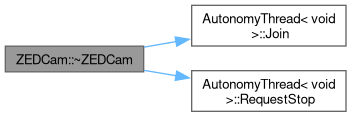

void RunDetachedPool(const unsigned int nNumTasksToQueue, const unsigned int nNumThreads=2, const bool bForceStopCurrentThreads=false)

When this method is called, it starts a thread pool full of threads that don't return std::futures (l...

Definition AutonomyThread.hpp:336

void JoinPool()

Waits for pool to finish executing tasks. This method will block the calling code until thread is fin...

Definition AutonomyThread.hpp:439
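The start-then-join usage of the pool can be illustrated with a much smaller stand-in. The sketch below is an assumption-laden simplification, not the `AutonomyThread` implementation (which is not shown in this section): `MiniPool` is a hypothetical class that borrows only the two method names, drops the `bForceStopCurrentThreads` parameter, and has each worker claim tasks from a shared atomic counter.

```cpp
#include <atomic>
#include <cassert>
#include <functional>
#include <thread>
#include <utility>
#include <vector>

// Hypothetical, simplified pool: runs the same task a fixed number of times across N threads.
class MiniPool
{
    public:
        explicit MiniPool(std::function<void()> fnTask) : m_fnTask(std::move(fnTask)) {}

        ~MiniPool() { JoinPool(); }

        // Starts nNumThreads workers that together execute the task nNumTasksToQueue times.
        void RunDetachedPool(unsigned int nNumTasksToQueue, unsigned int nNumThreads = 2)
        {
            assert(nNumThreads > 0);
            JoinPool();    // Make sure a previous batch has fully finished.
            m_nTasksRemaining.store(static_cast<long long>(nNumTasksToQueue));

            for (unsigned int nIter = 0; nIter < nNumThreads; ++nIter)
            {
                m_vWorkers.emplace_back(
                    [this]()
                    {
                        // fetch_sub returns the previous value, so exactly
                        // nNumTasksToQueue claims succeed across all workers.
                        while (m_nTasksRemaining.fetch_sub(1) > 0)
                        {
                            m_fnTask();
                        }
                    });
            }
        }

        // Blocks the caller until every worker has finished.
        void JoinPool()
        {
            for (std::thread& thWorker : m_vWorkers)
            {
                if (thWorker.joinable())
                {
                    thWorker.join();
                }
            }
            m_vWorkers.clear();
        }

    private:
        std::function<void()> m_fnTask;
        std::atomic<long long> m_nTasksRemaining{0};
        std::vector<std::thread> m_vWorkers;
};
```

In this sketch the caller sizes the batch by the total number of queued requests and then blocks in `JoinPool()`, so no request from the current iteration is still being served when the next iteration begins.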

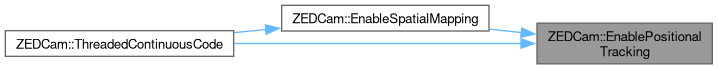

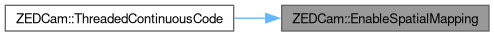

sl::ERROR_CODE EnableSpatialMapping() override

Enables the spatial mapping feature of the camera. Pose tracking will be enabled if it is not already...

Definition ZEDCam.cpp:2078

sl::ERROR_CODE EnableObjectDetection(const bool bEnableBatching=false) override

Enables the object detection and tracking feature of the camera.

Definition ZEDCam.cpp:2160

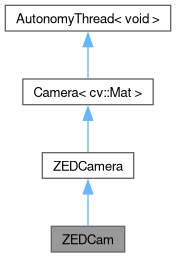

Public Member Functions inherited from
