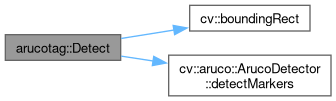

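Detects ArUco tags in the provided image (`cvFrame`) using `cvArucoDetector`, and returns one `tagdetectutils::ArucoTag` per detected marker.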

```cpp
{
    // Create instance variables.
    std::vector<int> vIDs;
    std::vector<std::vector<cv::Point2f>> cvMarkerCorners, cvRejectedCandidates;

    // Run the detector: fills the corner points, the marker IDs, and the rejected candidates.
    cvArucoDetector.detectMarkers(cvFrame, cvMarkerCorners, vIDs, cvRejectedCandidates);

    // Convert each detection into a tagdetectutils::ArucoTag.
    std::vector<tagdetectutils::ArucoTag> vDetectedTags;
    vDetectedTags.reserve(vIDs.size());

    for (long unsigned int unIter = 0; unIter < vIDs.size(); unIter++)
    {
        // Tag record for this detection.
        tagdetectutils::ArucoTag stDetectedTag;

        stDetectedTag.nID = vIDs[unIter];
        // Axis-aligned bounding box around the marker's four corners.
        stDetectedTag.pBoundingBox = std::make_shared<cv::Rect2d>(cv::boundingRect(cvMarkerCorners[unIter]));
        stDetectedTag.eDetectionMethod = tagdetectutils::TagDetectionMethod::eOpenCV;
        stDetectedTag.szClassName = "OpenCVTag";
        stDetectedTag.cvImageResolution = cvFrame.size();

        vDetectedTags.push_back(stDetectedTag);
    }

    return vDetectedTags;
}
```
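The only geometric step in the loop collapses each marker's four corner points into an axis-aligned box. The sketch below shows that idea without OpenCV. `Corner`, `BoxD`, and `BoundingBoxOf` are hypothetical stand-ins for `cv::Point2f`, `cv::Rect2d`, and `cv::boundingRect`. The box is kept in floating point here. `cv::boundingRect` returns an integer `cv::Rect`, which the listing then converts to `cv::Rect2d`, so OpenCV's values can differ from this version by rounding.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct Corner { float x; float y; };

// Hypothetical stand-in for cv::Rect2d: top-left corner plus size.
struct BoxD { double x; double y; double width; double height; };

// Axis-aligned bounding box of a marker's corner points, kept in floating point
// (unlike cv::boundingRect, which returns an integer cv::Rect).
BoxD BoundingBoxOf(const std::vector<Corner>& vCorners)
{
    assert(!vCorners.empty());  // an ArUco detection always has four corners

    double dMinX = vCorners.front().x, dMaxX = dMinX;
    double dMinY = vCorners.front().y, dMaxY = dMinY;
    for (const Corner& stCorner : vCorners)
    {
        dMinX = std::min(dMinX, static_cast<double>(stCorner.x));
        dMaxX = std::max(dMaxX, static_cast<double>(stCorner.x));
        dMinY = std::min(dMinY, static_cast<double>(stCorner.y));
        dMaxY = std::max(dMaxY, static_cast<double>(stCorner.y));
    }
    return BoxD{dMinX, dMinY, dMaxX - dMinX, dMaxY - dMinY};
}
```

Take a tilted marker with corners (10, 20), (50, 25), (45, 60), and (5, 55). For that input, `BoundingBoxOf` returns x = 5, y = 20, width = 45, height = 40. The box contains the whole marker but is larger than the marker whenever the marker is rotated in the image.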

Referenced symbols:

- `void detectMarkers(InputArray image, OutputArrayOfArrays corners, OutputArray ids, OutputArrayOfArrays rejectedImgPoints=noArray()) const` (member of `cv::aruco::ArucoDetector`)
- `Rect boundingRect(InputArray array)` (`cv::boundingRect`)
- `tagdetectutils::ArucoTag`: Represents a single ArUco tag. Combines attributes from TorchTag, TensorflowTag, and the original Aru... Definition: TagDetectionUtilty.hpp:59
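The loop uses the same counter to index both `vIDs` and `cvMarkerCorners`. That works because `detectMarkers` returns them as parallel arrays: for N detected markers, `ids` has N entries, in the same order as `corners`. The sketch below isolates that pairing without OpenCV. `Corner`, `TagRecord`, and `ZipDetections` are hypothetical names, and `TagRecord` is a trimmed-down stand-in for `tagdetectutils::ArucoTag`. The `assert` states the equal-length assumption explicitly, so a mismatch fails loudly instead of reading out of bounds.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical stand-in for cv::Point2f.
struct Corner { float x; float y; };

// Hypothetical, trimmed-down stand-in for tagdetectutils::ArucoTag.
struct TagRecord
{
    int nID;                       // marker ID reported by the detector
    std::vector<Corner> vCorners;  // corners of that same marker
    std::string szClassName;       // label, mirroring szClassName in the listing
};

// Pair each ID with the corner set at the same index, as the listing's loop does.
std::vector<TagRecord> ZipDetections(const std::vector<int>& vIDs,
                                     const std::vector<std::vector<Corner>>& vCorners)
{
    // detectMarkers documents ids and corners as equal-length, same-order arrays.
    assert(vIDs.size() == vCorners.size());

    std::vector<TagRecord> vTags;
    vTags.reserve(vIDs.size());
    for (std::size_t unIter = 0; unIter < vIDs.size(); ++unIter)
    {
        vTags.push_back(TagRecord{vIDs[unIter], vCorners[unIter], "OpenCVTag"});
    }
    return vTags;
}
```

Calling `reserve` before the loop, as the listing also does, means the vector allocates once for all detections instead of growing as it goes.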