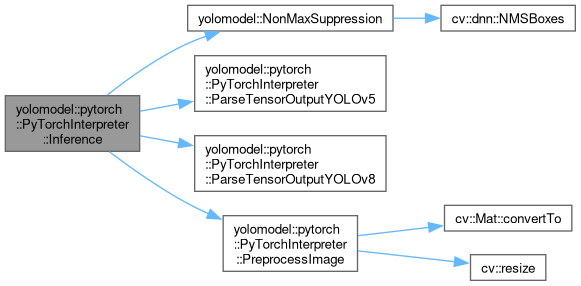

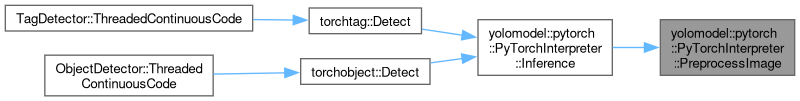

Given an input image forward the image through the YOLO model to run inference on the PyTorch model, then parse and repackage the output tensor data into a vector of easy-to-use Detection structs.

860 {

861

862 torch::set_num_threads(1);

863

864 std::vector<Detection> vObjects;

865

866

867 torch::Tensor trTensorImage =

PreprocessImage(cvInputFrame, m_trDevice);

868

869

870 std::vector<torch::jit::IValue> vInputs;

871 vInputs.push_back(trTensorImage);

872 torch::Tensor trOutputTensor;

873 try

874 {

875 trOutputTensor = m_trModel.forward(vInputs).toTensor();

876 }

877 catch (const c10::Error& trError)

878 {

879 LOG_ERROR(logging::g_qSharedLogger, "Error running inference: {}", trError.what());

880 return vObjects;

881 }

882

883

884 int nImgSize = m_cvModelInputSize.

height;

885 int nP3Stride = std::pow((nImgSize / 8), 2);

886 int nP4Stride = std::pow((nImgSize / 16), 2);

887 int nP5Stride = std::pow((nImgSize / 32), 2);

888

889 int nYOLOv5AnchorsPerGridPoint = 3;

890 int nYOLOv8AnchorsPerGridPoint = 1;

891 int nYOLOv5TotalPredictionLength =

892 (nP3Stride * nYOLOv5AnchorsPerGridPoint) + (nP4Stride * nYOLOv5AnchorsPerGridPoint) + (nP5Stride * nYOLOv5AnchorsPerGridPoint);

893 int nYOLOv8TotalPredictionLength =

894 (nP3Stride * nYOLOv8AnchorsPerGridPoint) + (nP4Stride * nYOLOv8AnchorsPerGridPoint) + (nP5Stride * nYOLOv8AnchorsPerGridPoint);

895

896

897 std::vector<int> vClassIDs;

898 std::vector<std::string> vClassLabels;

899 std::vector<float> vClassConfidences;

900 std::vector<cv::Rect> vBoundingBoxes;

901

902

903 int nLargestDimension = *std::max_element(trOutputTensor.sizes().begin(), trOutputTensor.sizes().end());

904

905 if (nLargestDimension == nYOLOv5TotalPredictionLength)

906 {

907

908 this->

ParseTensorOutputYOLOv5(trOutputTensor, vClassIDs, vClassConfidences, vBoundingBoxes, cvInputFrame.

size(), fMinObjectConfidence);

909 }

910

911 else if (nLargestDimension == nYOLOv8TotalPredictionLength)

912 {

913

914 this->

ParseTensorOutputYOLOv8(trOutputTensor, vClassIDs, vClassConfidences, vBoundingBoxes, cvInputFrame.

size(), fMinObjectConfidence);

915 }

916

917

918 NonMaxSuppression(vObjects, vClassIDs, vClassConfidences, vBoundingBoxes, fMinObjectConfidence, fNMSThreshold);

919

920

921 for (size_t nIter = 0; nIter < vObjects.size(); ++nIter)

922 {

923

924 if (vClassIDs[nIter] >= 0 && vClassIDs[nIter] < static_cast<int>(m_vClassLabels.size()))

925 {

926 vObjects[nIter].szClassName = m_vClassLabels[vClassIDs[nIter]];

927 }

928 else

929 {

930 vObjects[nIter].szClassName = "UnknownClass";

931 }

932 }

933

934 return vObjects;

935 }

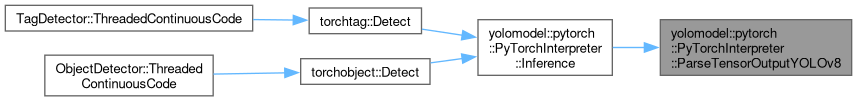

void ParseTensorOutputYOLOv8(const torch::Tensor &trOutput, std::vector< int > &vClassIDs, std::vector< float > &vClassConfidences, std::vector< cv::Rect > &vBoundingBoxes, const cv::Size &cvInputFrameSize, const float fMinObjectConfidence)

Given a tensor output from a YOLOv5 model, parse it's output into something more usable.

Definition YOLOModel.hpp:1101

void ParseTensorOutputYOLOv5(const torch::Tensor &trOutput, std::vector< int > &vClassIDs, std::vector< float > &vClassConfidences, std::vector< cv::Rect > &vBoundingBoxes, const cv::Size &cvInputFrameSize, const float fMinObjectConfidence)

Given a tensor output from a YOLOv5 model, parse it's output into something more usable.

Definition YOLOModel.hpp:993

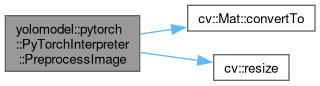

torch::Tensor PreprocessImage(const cv::Mat &cvInputFrame, const torch::Device &trDevice)

Given an input image, preprocess the image to match the input tensor shape of the model,...

Definition YOLOModel.hpp:964

void NonMaxSuppression(std::vector< Detection > &vObjects, std::vector< int > &vClassIDs, std::vector< float > &vClassConfidences, std::vector< cv::Rect > &vBoundingBoxes, float fMinObjectConfidence, float fNMSThreshold)

Perform non max suppression for the given predictions. This eliminates/combines predictions that over...

Definition YOLOModel.hpp:71