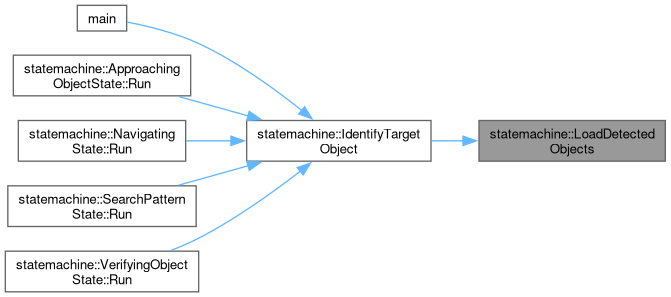

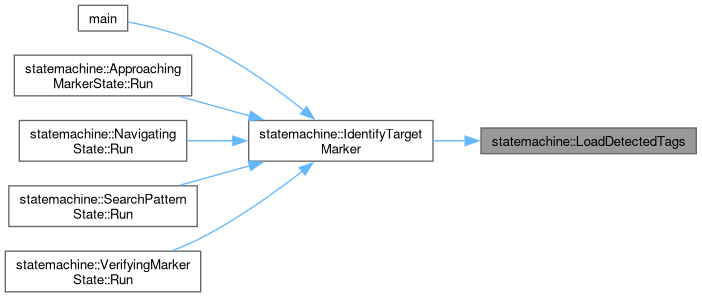

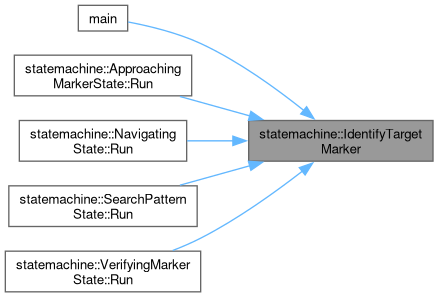

Identify a target object in the rover's vision, using Torch detection.

    {
        // Working storage for this check.
        std::vector<objectdetectutils::Object> vDetectedObjects;
        // Assumed: best-candidate declaration (line missing from the extracted listing).
        objectdetectutils::Object stBestObject;
        std::string szIdentifiedObjects = "";

        // Timestamp used to compute how long each detection has existed.
        std::chrono::system_clock::time_point tmCurrentTime = std::chrono::system_clock::now();

        // Assumed: aggregation call (line missing from the extracted listing).
        LoadDetectedObjects(vDetectedObjects, vObjectDetectors);

        // Assumed: loop header (line missing from the extracted listing).
        for (const objectdetectutils::Object& stCandidate : vDetectedObjects)
        {
            // Age of the detection in seconds.
            double dObjectTotalAge = std::fabs(std::chrono::duration_cast<std::chrono::milliseconds>(tmCurrentTime - stCandidate.tmCreation).count() / 1000.0);
            // Bounding-box area in pixels.
            double dArea = stCandidate.pBoundingBox->area();
            // Share of the image covered by the bounding box, in percent.
            double dAreaPercentage = (dArea / (stCandidate.cvImageResolution.width * stCandidate.cvImageResolution.height)) * 100.0;

            // Skip detections without a valid (positive) distance estimate.
            if (stCandidate.dStraightLineDistance <= 0.0)
            {
                continue;
            }

            // Skip detections whose class does not match the desired waypoint type.
            switch (eDesiredDetectionType)
            {
                case geoops::WaypointType::eMalletWaypoint:
                {
                    if (stCandidate.eDetectionType != objectdetectutils::ObjectDetectionType::eMallet)
                    {
                        continue;
                    }
                    break;
                }
                case geoops::WaypointType::eWaterBottleWaypoint:
                {
                    if (stCandidate.eDetectionType != objectdetectutils::ObjectDetectionType::eWaterBottle)
                    {
                        continue;
                    }
                    break;
                }
                case geoops::WaypointType::eRockPickWaypoint:
                {
                    if (stCandidate.eDetectionType != objectdetectutils::ObjectDetectionType::eRockPick)
                    {
                        continue;
                    }
                    break;
                }
                case geoops::WaypointType::eObjectWaypoint:
                {
                    // A generic object waypoint accepts any of the three known classes.
                    if (stCandidate.eDetectionType != objectdetectutils::ObjectDetectionType::eMallet &&
                        stCandidate.eDetectionType != objectdetectutils::ObjectDetectionType::eWaterBottle &&
                        stCandidate.eDetectionType != objectdetectutils::ObjectDetectionType::eRockPick)
                    {
                        continue;
                    }
                    break;
                }
                default:
                {
                    // Any other waypoint type applies no class filter.
                    break;
                }
            }

            // Only Torch detections are considered.
            if (stCandidate.eDetectionMethod == objectdetectutils::ObjectDetectionMethod::eTorch)
            {
                // Record every Torch candidate for the debug log, including ones rejected below.
                szIdentifiedObjects += "\tObject Class: " + stCandidate.szClassName + " Object Age: " + std::to_string(dObjectTotalAge) +
                                       "s Object Screen Percentage: " + std::to_string(dAreaPercentage) + "%\n";

                // Reject detections that are too small on screen or too recent.
                if (dAreaPercentage < constants::BBOX_MIN_SCREEN_PERCENTAGE || dObjectTotalAge < constants::BBOX_MIN_LIFETIME_THRESHOLD)
                {
                    continue;
                }

                // Keep the candidate with the largest bounding box.
                if (dArea > stBestObject.pBoundingBox->area())
                {
                    stBestObject = stCandidate;
                }
            }
        }

        // Log the candidate list only when a best object was selected.
        if (stBestObject.dConfidence != 0.0)
        {
            LOG_DEBUG(logging::g_qSharedLogger, "ObjectDetectionChecker: Identified objects:\n{}", szIdentifiedObjects);
        }

        // Report the result through the output parameter.
        stObjectTarget = stBestObject;

        // Return the total number of detections, not only the ones that passed the filters.
        return static_cast<int>(vDetectedObjects.size());
    }
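The filtering and selection steps in the listing can be illustrated in isolation. The following is a minimal sketch, not the project's implementation: `Candidate`, `AreaPercentage`, `AgeSeconds`, and `SelectBestCandidate` are hypothetical names, the thresholds are passed as parameters instead of being read from `constants::`, and the class and detection-method filters are left out for brevity.

```cpp
#include <cassert>
#include <chrono>
#include <optional>
#include <vector>

// Hypothetical, simplified stand-in for objectdetectutils::Object.
struct Candidate
{
    double dBoxArea;                                     // Bounding-box area in pixels.
    int nImageWidth;                                     // Image width in pixels.
    int nImageHeight;                                    // Image height in pixels.
    double dStraightLineDistance;                        // Distance estimate; <= 0 means invalid.
    std::chrono::system_clock::time_point tmCreation;    // When the detection first appeared.
};

// Percentage of the image covered by the bounding box.
double AreaPercentage(const Candidate& stCandidate)
{
    assert(stCandidate.nImageWidth > 0 && stCandidate.nImageHeight > 0);
    return stCandidate.dBoxArea / (static_cast<double>(stCandidate.nImageWidth) * stCandidate.nImageHeight) * 100.0;
}

// Age of the detection in seconds, relative to tmNow.
double AgeSeconds(const Candidate& stCandidate, std::chrono::system_clock::time_point tmNow)
{
    return std::chrono::duration<double>(tmNow - stCandidate.tmCreation).count();
}

// Returns the largest candidate that has a valid distance, covers at least
// dMinScreenPercentage of the image, and is at least dMinLifetimeSeconds old.
// Returns std::nullopt when no candidate passes.
std::optional<Candidate> SelectBestCandidate(const std::vector<Candidate>& vCandidates,
                                             std::chrono::system_clock::time_point tmNow,
                                             double dMinScreenPercentage,
                                             double dMinLifetimeSeconds)
{
    std::optional<Candidate> stBest;
    for (const Candidate& stCandidate : vCandidates)
    {
        if (stCandidate.dStraightLineDistance <= 0.0)
        {
            continue;
        }
        if (AreaPercentage(stCandidate) < dMinScreenPercentage || AgeSeconds(stCandidate, tmNow) < dMinLifetimeSeconds)
        {
            continue;
        }
        if (!stBest || stCandidate.dBoxArea > stBest->dBoxArea)
        {
            stBest = stCandidate;
        }
    }
    return stBest;
}
```

Returning `std::optional` makes the "nothing qualified" case explicit, whereas the listing signals it through a default-constructed object whose `dConfidence` is `0.0`.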

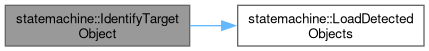

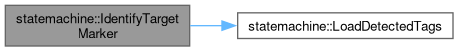

`void LoadDetectedObjects(std::vector<objectdetectutils::Object>& vDetectedObjects, const std::vector<std::shared_ptr<ObjectDetector>>& vObjectDetectors)`

Aggregates all detected objects from each provided object detector.

Definition: ObjectDetectionChecker.hpp:40
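The aggregation described here might look roughly like the sketch below. It only illustrates the pattern of appending each detector's results to one output vector: `DetectedObject`, `FakeDetector`, `GetObjects`, and `AggregateDetections` are hypothetical names, and the real `ObjectDetector` interface is not shown in this section.

```cpp
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Hypothetical, simplified stand-in for objectdetectutils::Object.
struct DetectedObject
{
    std::string szClassName;
};

// Hypothetical detector that simply hands back a fixed list of detections.
class FakeDetector
{
    public:
        explicit FakeDetector(std::vector<DetectedObject> vObjects) : m_vObjects(std::move(vObjects)) {}

        const std::vector<DetectedObject>& GetObjects() const { return m_vObjects; }

    private:
        std::vector<DetectedObject> m_vObjects;
};

// Appends the detections of every non-null detector to vDetectedObjects,
// preserving detector order. Existing entries in the output are kept.
void AggregateDetections(std::vector<DetectedObject>& vDetectedObjects,
                         const std::vector<std::shared_ptr<FakeDetector>>& vDetectors)
{
    for (const std::shared_ptr<FakeDetector>& pDetector : vDetectors)
    {
        if (!pDetector)
        {
            continue;    // Skip detectors that were never created.
        }
        const std::vector<DetectedObject>& vObjects = pDetector->GetObjects();
        vDetectedObjects.insert(vDetectedObjects.end(), vObjects.begin(), vObjects.end());
    }
}
```

Passing the output vector by reference matches the documented signature and lets the caller reuse one buffer across calls.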