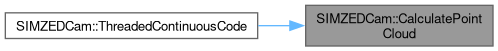

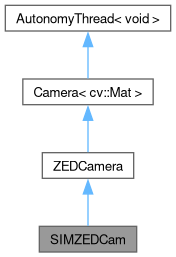

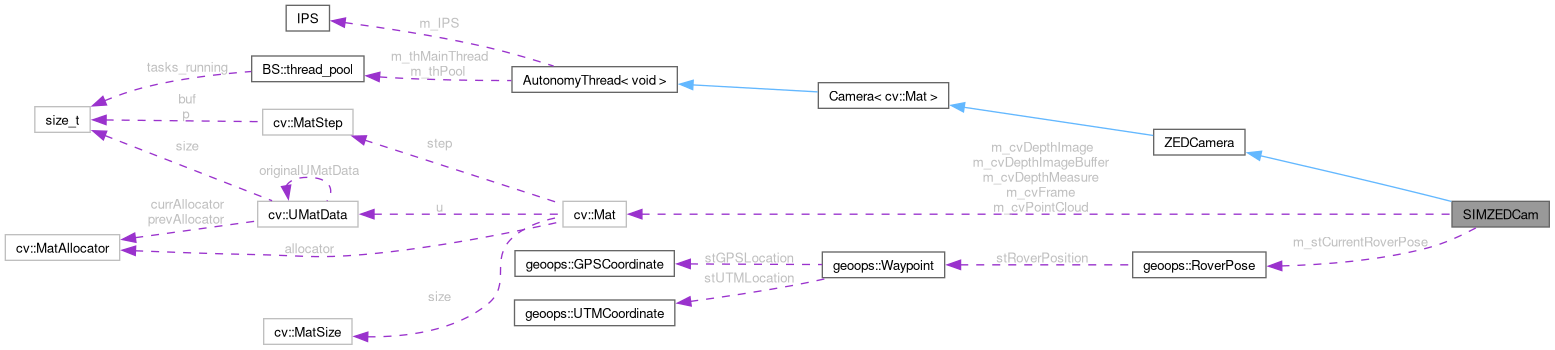

This class implements and interfaces with the SIM cameras and data. It is designed in such a way that multiple other classes/threads can safely call any method of an object of this class withing resource corruption or slowdown of the camera.

More...

|

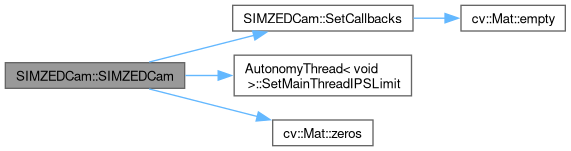

| | SIMZEDCam (const std::string szCameraPath, const int nPropResolutionX, const int nPropResolutionY, const int nPropFramesPerSecond, const double dPropHorizontalFOV, const double dPropVerticalFOV, const bool bEnableRecordingFlag, const int nNumFrameRetrievalThreads=10, const unsigned int unCameraSerialNumber=0) |

| | Construct a new SIM Cam:: SIM Cam object.

|

| |

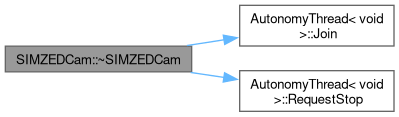

| | ~SIMZEDCam () |

| | Destroy the SIM Cam:: SIM Cam object.

|

| |

| std::future< bool > | RequestFrameCopy (cv::Mat &cvFrame) override |

| | Puts a frame pointer into a queue so a copy of a frame from the camera can be written to it. Remember, this code will be ran in whatever, class/thread calls it.

|

| |

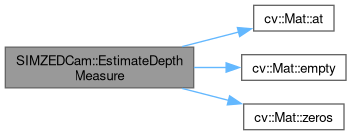

| std::future< bool > | RequestDepthCopy (cv::Mat &cvDepth, const bool bRetrieveMeasure=true) |

| | Requests a depth measure or image from the camera. Puts a frame pointer into a queue so a copy of a frame from the camera can be written to it. This image has the same shape as a grayscale image, but the values represent the depth in MILLIMETERS. The ZEDSDK will always return this measure in MILLIMETERS.

|

| |

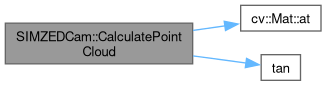

| std::future< bool > | RequestPointCloudCopy (cv::Mat &cvPointCloud) |

| | Requests a point cloud image from the camera. This image has the same resolution as a normal image but with three XYZ values replacing the old color values in the 3rd dimension. The units and sign of the XYZ values are determined by ZED_MEASURE_UNITS and ZED_COORD_SYSTEM constants set in AutonomyConstants.h.

|

| |

| std::future< bool > | RequestPositionalPoseCopy (Pose &stPose) override |

| | Puts a sl::GeoPose pointer into a queue so a copy of a GeoPose from the camera can be written to it.

|

| |

| std::future< bool > | RequestFusionGeoPoseCopy (sl::GeoPose &slGeoPose) override |

| | Puts a sl::GeoPose pointer into a queue so a copy of a GeoPose from the camera can be written to it.

|

| |

| std::future< bool > | RequestSensorsCopy (sl::SensorsData &slSensorsData) override |

| | Requests a copy of the sensors data from the camera.

|

| |

| sl::ERROR_CODE | ResetPositionalTracking () override |

| | This method is used to reset the positional tracking of the camera. Because this is a simulation camera, and then ZEDSDK is not available, this method will just reset the offsets to zero.

|

| |

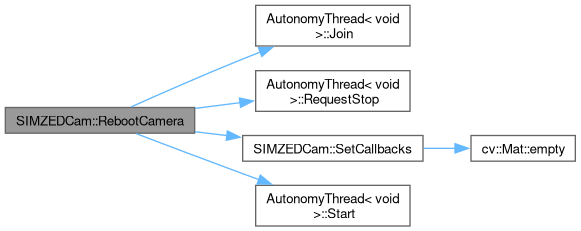

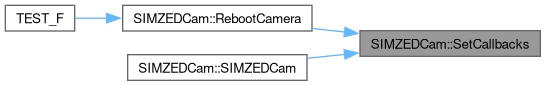

| sl::ERROR_CODE | RebootCamera () override |

| | This method is used to reboot the camera. This method will stop the camera thread, join the camera thread, destroy the camera stream objects, reconstruct the camera stream objects, set the frame callbacks, and restart the camera thread. This simulates a camera reboot since the ZED SDK is not available.

|

| |

| sl::FUSION_ERROR_CODE | SubscribeFusionToCameraUUID (sl::CameraIdentifier &slCameraUUID) override |

| | This method is used to subscribe the sl::Fusion object to the camera with the given UUID. Given that this is a simulation camera, and the ZED SDK is not available, this method will do nothing.

|

| |

| sl::CameraIdentifier | PublishCameraToFusion () override |

| | This method is used to publish the camera to the sl::Fusion object. Since this is a simulation camera this will do nothing.

|

| |

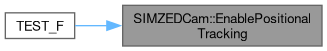

| sl::ERROR_CODE | EnablePositionalTracking (const float fExpectedCameraHeightFromFloorTolerance=constants::ZED_DEFAULT_FLOOR_PLANE_ERROR) override |

| | This method is used to enable positional tracking on the camera. Since this is a simulation camera, this method will just set the member variable and then use the NavBoard to simulate the camera's position.

|

| |

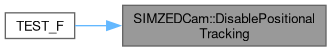

| void | DisablePositionalTracking () override |

| | This method is used to disable positional tracking on the camera. Since this is a simulation camera, this method will just set the member variable.

|

| |

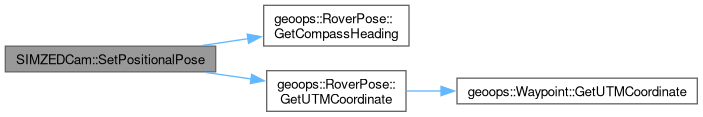

| void | SetPositionalPose (const double dX, const double dY, const double dZ, const double dXO, const double dYO, const double dZO) override |

| | This method is used to set the positional pose of the camera. Since this is a simulation camera, this method will just set the offset member variables.

|

| |

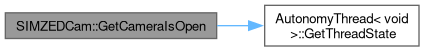

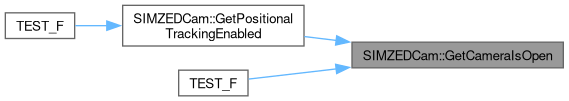

| bool | GetCameraIsOpen () override |

| | Accessor for the camera open status.

|

| |

| bool | GetUsingGPUMem () const override |

| | Returns if the camera is using GPU memory. This is a simulation camera, so this method will always return false.

|

| |

| std::string | GetCameraModel () override |

| | Accessor for the name of this model of camera.

|

| |

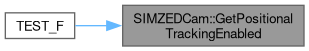

| bool | GetPositionalTrackingEnabled () override |

| | Accessor for the if the camera's positional tracking is enabled. Since this is a simulation camera, this method will just return the member variable.

|

| |

| | ZEDCamera (const int nPropResolutionX, const int nPropResolutionY, const int nPropFramesPerSecond, const double dPropHorizontalFOV, const double dPropVerticalFOV, const bool bEnableRecordingFlag, const bool bMemTypeGPU, const bool bUseHalfDepthPrecision, const bool bEnableFusionMaster, const int nNumFrameRetrievalThreads, const unsigned int unCameraSerialNumber) |

| | Construct a new ZEDCamera object.

|

| |

| virtual | ~ZEDCamera ()=default |

| | Destroy the ZEDCamera object.

|

| |

| virtual std::future< bool > | RequestFrameCopy (cv::cuda::GpuMat &cvGPUFrame) |

| | Puts a frame pointer into a queue so a copy of a frame from the camera can be written to it.

|

| |

| virtual std::future< bool > | RequestDepthCopy (cv::cuda::GpuMat &cvGPUDepth, const bool bRetrieveMeasure=true) |

| | Puts a frame pointer into a queue so a copy of a depth frame from the camera can be written to it.

|

| |

| virtual std::future< bool > | RequestPointCloudCopy (cv::cuda::GpuMat &cvGPUPointCloud) |

| | Puts a frame pointer into a queue so a copy of a point cloud from the camera can be written to it.

|

| |

| virtual std::future< bool > | RequestFloorPlaneCopy (sl::Plane &slFloorPlane) |

| | Puts a FloorPlane pointer into a queue so a copy of a FloorPlane from the camera can be written to it.

|

| |

| virtual std::future< bool > | RequestObjectsCopy (std::vector< sl::ObjectData > &vObjectData) |

| | Puts a vector of ObjectData pointers into a queue so a copy of a vector of ObjectData from the camera can be written to it.

|

| |

| virtual std::future< bool > | RequestBatchedObjectsCopy (std::vector< sl::ObjectsBatch > &vBatchedObjectData) |

| | Puts a vector of ObjectsBatch pointers into a queue so a copy of a vector of ObjectsBatch from the camera can be written to it.

|

| |

| virtual sl::ERROR_CODE | TrackCustomBoxObjects (std::vector< ZedObjectData > &vCustomObjects) |

| | Tracks custom bounding boxes in the camera's field of view.

|

| |

| virtual sl::FUSION_ERROR_CODE | IngestGPSDataToFusion (geoops::GPSCoordinate stNewGPSLocation) |

| | Ingests GPS data into the fusion object.

|

| |

| virtual sl::ERROR_CODE | EnableSpatialMapping () |

| | Enables spatial mapping.

|

| |

| virtual void | DisableSpatialMapping () |

| | Disables spatial mapping.

|

| |

| virtual sl::ERROR_CODE | EnableObjectDetection (const bool bEnableBatching=false) |

| | Enables object detection.

|

| |

| virtual void | DisableObjectDetection () |

| | Disables object detection.

|

| |

| virtual bool | GetIsFusionMaster () const |

| | Accessor for if this ZED is running a fusion instance.

|

| |

| virtual unsigned int | GetCameraSerial () |

| | Accessor for the Camera Serial private member.

|

| |

| virtual sl::PositionalTrackingStatus | GetPositionalTrackingState () |

| | Accessor for the Positional Tracking State private member.

|

| |

| virtual sl::FusedPositionalTrackingStatus | GetFusedPositionalTrackingState () |

| | Accessor for the Fused Positional Tracking State private member.

|

| |

| virtual sl::SPATIAL_MAPPING_STATE | GetSpatialMappingState () |

| | Accessor for the Spatial Mapping State private member.

|

| |

| virtual sl::SPATIAL_MAPPING_STATE | ExtractSpatialMapAsync (std::future< sl::Mesh > &fuMeshFuture) |

| | Puts a Mesh pointer into a queue so a copy of a spatial mapping mesh from the camera can be written to it.

|

| |

| virtual bool | GetObjectDetectionEnabled () |

| | Accessor for the Object Detection Enabled private member.

|

| |

| | Camera (const int nPropResolutionX, const int nPropResolutionY, const int nPropFramesPerSecond, const PIXEL_FORMATS ePropPixelFormat, const double dPropHorizontalFOV, const double dPropVerticalFOV, const bool bEnableRecordingFlag, const int nNumFrameRetrievalThreads=5) |

| | Construct a new Camera object.

|

| |

| virtual | ~Camera () |

| | Destroy the Camera object.

|

| |

| cv::Size | GetPropResolution () const |

| | Accessor for the Prop Resolution private member.

|

| |

| int | GetPropFramesPerSecond () const |

| | Accessor for the Prop Frames Per Second private member.

|

| |

| PIXEL_FORMATS | GetPropPixelFormat () const |

| | Accessor for the Prop Pixel Format private member.

|

| |

| double | GetPropHorizontalFOV () const |

| | Accessor for the Prop Horizontal F O V private member.

|

| |

| double | GetPropVerticalFOV () const |

| | Accessor for the Prop Vertical F O V private member.

|

| |

| bool | GetEnableRecordingFlag () const |

| | Accessor for the Enable Recording Flag private member.

|

| |

| void | SetEnableRecordingFlag (const bool bEnableRecordingFlag) |

| | Mutator for the Enable Recording Flag private member.

|

| |

| | AutonomyThread () |

| | Construct a new Autonomy Thread object.

|

| |

| virtual | ~AutonomyThread () |

| | Destroy the Autonomy Thread object. If the parent object or main thread is destroyed or exited while this thread is still running, a race condition will occur. Stopping and joining the thread here insures that the main program can't exit if the user forgot to stop and join the thread.

|

| |

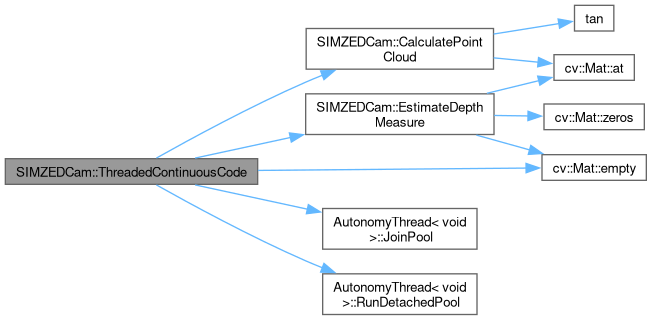

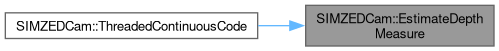

| void | Start () |

| | When this method is called, it starts a new thread that runs the code within the ThreadedContinuousCode method. This is the users main code that will run the important and continuous code for the class.

|

| |

| void | RequestStop () |

| | Signals threads to stop executing user code, terminate. DOES NOT JOIN. This method will not force the thread to exit, if the user code is not written properly and contains WHILE statement or any other long-executing or blocking code, then the thread will not exit until the next iteration.

|

| |

| void | Join () |

| | Waits for thread to finish executing and then closes thread. This method will block the calling code until thread is finished.

|

| |

| bool | Joinable () const |

| | Check if the code within the thread and all pools created by it are finished executing and the thread is ready to be closed.

|

| |

| AutonomyThreadState | GetThreadState () const |

| | Accessor for the Threads State private member.

|

| |

| IPS & | GetIPS () |

| | Accessor for the Frame I P S private member.

|

| |

|

| enum | AutonomyThreadState |

| |

| void | RunPool (const unsigned int nNumTasksToQueue, const unsigned int nNumThreads=2, const bool bForceStopCurrentThreads=false) |

| | When this method is called, it starts/adds tasks to a thread pool that runs nNumTasksToQueue copies of the code within the PooledLinearCode() method using nNumThreads number of threads. This is meant to be used as an internal utility of the child class to further improve parallelization. Default value for nNumThreads is 2.

|

| |

| void | RunDetachedPool (const unsigned int nNumTasksToQueue, const unsigned int nNumThreads=2, const bool bForceStopCurrentThreads=false) |

| | When this method is called, it starts a thread pool full of threads that don't return std::futures (like a placeholder for the thread return type). This means the thread will not have a return type and there is no way to determine if the thread has finished other than calling the Join() method. Only use this if you want to 'set and forget'. It will be faster as it doesn't return futures. Runs PooledLinearCode() method code. This is meant to be used as an internal utility of the child class to further improve parallelization.

|

| |

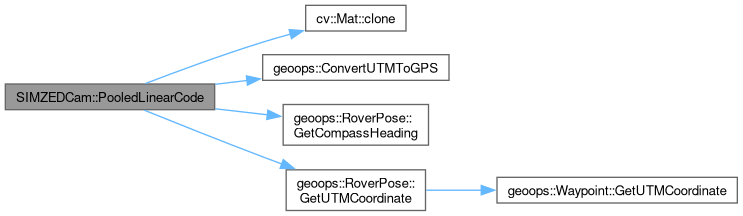

| void | ParallelizeLoop (const int nNumThreads, const N tTotalIterations, F &&tLoopFunction) |

| | Given a ref-qualified looping function and an arbitrary number of iterations, this method will divide up the loop and run each section in a thread pool. This function must not return anything. This method will block until the loop has completed.

|

| |

| void | ClearPoolQueue () |

| | Clears any tasks waiting to be ran in the queue, tasks currently running will remain running.

|

| |

| void | JoinPool () |

| | Waits for pool to finish executing tasks. This method will block the calling code until thread is finished.

|

| |

| bool | PoolJoinable () const |

| | Check if the internal pool threads are done executing code and the queue is empty.

|

| |

| void | SetMainThreadIPSLimit (int nMaxIterationsPerSecond=0) |

| | Mutator for the Main Thread Max I P S private member.

|

| |

| int | GetPoolNumOfThreads () |

| | Accessor for the Pool Num Of Threads private member.

|

| |

| int | GetPoolQueueLength () |

| | Accessor for the Pool Queue Size private member.

|

| |

| std::vector< void > | GetPoolResults () |

| | Accessor for the Pool Results private member. The action of getting results will destroy and remove them from this object. This method blocks if the thread is not finished, so no need to call JoinPool() before getting results.

|

| |

| int | GetMainThreadMaxIPS () const |

| | Accessor for the Main Thread Max I P S private member.

|

| |

|

const std::memory_order | ATOMIC_MEMORY_ORDER_METHOD = std::memory_order_relaxed |

| |

|

unsigned int | m_unCameraSerialNumber |

| |

|

bool | m_bCameraIsFusionMaster |

| |

|

int | m_nPropResolutionX |

| |

|

int | m_nPropResolutionY |

| |

|

int | m_nPropFramesPerSecond |

| |

|

int | m_nNumFrameRetrievalThreads |

| |

|

PIXEL_FORMATS | m_ePropPixelFormat |

| |

|

double | m_dPropHorizontalFOV |

| |

|

double | m_dPropVerticalFOV |

| |

|

std::atomic_bool | m_bEnableRecordingFlag |

| |

|

std::queue< containers::FrameFetchContainer< cv::Mat > > | m_qFrameCopySchedule |

| |

|

std::shared_mutex | m_muPoolScheduleMutex |

| |

|

std::shared_mutex | m_muFrameCopyMutex |

| |

|

IPS | m_IPS |

| |

Public Member Functions inherited from

Public Member Functions inherited from