196{

197 LOG_INFO(logging::g_qSharedLogger, "WebRTC camera {} opening WebSocket to {}...", m_szStreamerID, szSignallingServerURL);

198

199 m_pWebSocket->open(szSignallingServerURL);

200

202

204

205

206 m_pWebSocket->onOpen(

207 [this]()

208 {

209

210 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} WebSocket OPEN. Connected to {}. Sending 'listStreamers'...", m_szStreamerID, m_szSignallingServerURL);

211

212

213 nlohmann::json jsnStreamList;

214 jsnStreamList["type"] = "listStreamers";

215 m_pWebSocket->send(jsnStreamList.dump());

216 });

217

218

219 m_pWebSocket->onClosed(

220 [this]()

221 {

222

223 LOG_INFO(logging::g_qSharedLogger, "WebRTC camera {} WebSocket CLOSED. Disconnected from signalling server.", m_szStreamerID);

224 });

225

226

227 m_pWebSocket->onMessage(

228 [this](std::variant<rtc::binary, rtc::string> rtcMessage)

229 {

230 try

231 {

232

233 nlohmann::json jsnMessage;

234

235

236 if (std::holds_alternative<rtc::string>(rtcMessage))

237 {

238

239 std::string szMessage = std::get<rtc::string>(rtcMessage);

240

241

242 jsnMessage = nlohmann::json::parse(szMessage);

243 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} WS Message (String): {}", m_szStreamerID, szMessage);

244 }

245 else if (std::holds_alternative<rtc::binary>(rtcMessage))

246 {

247

248 rtc::binary rtcBinaryData = std::get<rtc::binary>(rtcMessage);

249

250 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} WS Message (Binary) of length: {}", m_szStreamerID, rtcBinaryData.size());

251

252

253 std::string szBinaryDataStr(reinterpret_cast<const char*>(rtcBinaryData.data()), rtcBinaryData.size());

254

255 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} WS Binary Content: {}", m_szStreamerID, szBinaryDataStr);

256

257 jsnMessage = nlohmann::json::parse(szBinaryDataStr);

258 }

259 else

260 {

261 LOG_ERROR(logging::g_qSharedLogger, "WebRTC camera {} WS Received unknown message type.", m_szStreamerID);

262 }

263

264

265 if (jsnMessage.contains("type"))

266 {

267 std::string szType = jsnMessage["type"];

268

269 if (szType == "config")

270 {

271

272 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Received 'config': {}", m_szStreamerID, jsnMessage.dump());

273 }

274

275 else if (szType == "offer")

276 {

277

278 std::string sdp = jsnMessage["sdp"];

279 m_pPeerConnection->setRemoteDescription(rtc::Description(sdp, "offer"));

280 LOG_DEBUG(logging::g_qSharedLogger,

281 "WebRTC camera {} Received 'offer'. SDP Length: {}. Setting Remote Description...",

282 m_szStreamerID,

283 sdp.length());

284

285

286 m_pPeerConnection->setLocalDescription();

287 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Triggered setLocalDescription() to generate answer.", m_szStreamerID);

288 }

289

290 else if (szType == "answer")

291 {

292

293 std::string sdp = jsnMessage["sdp"];

294 m_pPeerConnection->setRemoteDescription(rtc::Description(sdp, "answer"));

295 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Received 'answer'. Setting Remote Description.", m_szStreamerID);

296 }

297

298 else if (szType == "iceCandidate")

299 {

300

301 nlohmann::json jsnCandidate = jsnMessage["candidate"];

302 std::string szCandidateStr = jsnCandidate["candidate"];

303

304 rtc::Candidate rtcCandidate = rtc::Candidate(szCandidateStr);

305 m_pPeerConnection->addRemoteCandidate(rtcCandidate);

306 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Received 'iceCandidate'. Added: {}", m_szStreamerID, szCandidateStr);

307 }

308 else if (szType == "streamerList")

309 {

310

311 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Received 'streamerList': {}", m_szStreamerID, jsnMessage.dump());

312

313

314 if (jsnMessage.contains("ids"))

315 {

316 std::vector<std::string> streamerList = jsnMessage["ids"].get<std::vector<std::string>>();

317 if (std::find(streamerList.begin(), streamerList.end(), m_szStreamerID) != streamerList.end())

318 {

319

320 nlohmann::json jsnStream;

321 jsnStream["type"] = "subscribe";

322 jsnStream["streamerId"] = m_szStreamerID;

323 m_pWebSocket->send(jsnStream.dump());

324

325 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Streamer ID {} found! Sending 'subscribe'...", m_szStreamerID, m_szStreamerID);

326 }

327 else

328 {

329 LOG_ERROR(logging::g_qSharedLogger, "WebRTC camera {} Streamer ID {} NOT found in streamer list!", m_szStreamerID, m_szStreamerID);

330 }

331 }

332 else

333 {

334 LOG_ERROR(logging::g_qSharedLogger, "WebRTC camera {} Streamer list does not contain 'ids' field!", m_szStreamerID);

335 }

336 }

337 else

338 {

339 LOG_ERROR(logging::g_qSharedLogger, "WebRTC camera {} Unknown message type received: {}", m_szStreamerID, szType);

340 }

341 }

342 }

343 catch (const std::exception& e)

344 {

345

346 LOG_ERROR(logging::g_qSharedLogger, "WebRTC camera {} Exception during Negotiation: {}", m_szStreamerID, e.what());

347 }

348 });

349

350 m_pWebSocket->onError(

351 [this](const std::string& szError)

352 {

353

354 LOG_ERROR(logging::g_qSharedLogger, "WebRTC camera {} WebSocket Error: {}", m_szStreamerID, szError);

355 });

356

358

360

361 m_pPeerConnection->onLocalDescription(

362 [this](rtc::Description rtcDescription)

363 {

364 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Generated Local Description (Type: {}).", m_szStreamerID, rtcDescription.typeString());

365

366

367 if (rtcDescription.typeString() == "offer")

368 {

369 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Ignoring 'offer' type in onLocalDescription.", m_szStreamerID);

370 return;

371 }

372

373

374 nlohmann::json jsnConfigMessage;

375 jsnConfigMessage["type"] = "layerPreference";

376 jsnConfigMessage["spatialLayer"] = 0;

377 jsnConfigMessage["temporalLayer"] = 0;

378 jsnConfigMessage["playerId"] = "";

379 m_pWebSocket->send(jsnConfigMessage.dump());

380 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC Sent 'layerPreference'.");

381

382

383 nlohmann::json jsnMessage;

384 jsnMessage["type"] = rtcDescription.typeString();

385

386 jsnMessage["minBitrateBps"] = 0;

387 jsnMessage["maxBitrateBps"] = 0;

388

389 std::string szSDP = rtcDescription.generateSdp();

390

391 std::string szMungedSDP =

392 std::regex_replace(szSDP, std::regex("(a=fmtp:\\d+ level-asymmetry-allowed=.*)\r\n"), "$1;x-google-start-bitrate=10000;x-google-max-bitrate=100000\r\n");

393 jsnMessage["sdp"] = szMungedSDP;

394

395 m_pWebSocket->send(jsnMessage.dump());

396

397

398 LOG_INFO(logging::g_qSharedLogger, "WebRTC camera {} Sent Local Description to Server (Munged SDP).", m_szStreamerID);

399 });

400

401 m_pPeerConnection->onTrack(

402 [this](std::shared_ptr<rtc::Track> rtcTrack)

403 {

404

405 rtc::Description::Media rtcMediaDescription = rtcTrack->description();

406

407 std::string szMediaType = rtcMediaDescription.type();

408

409 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} onTrack triggered. Media Type: {}", m_szStreamerID, szMediaType);

410

411

412 if (szMediaType != "video")

413 {

414 LOG_DEBUG(logging::g_qSharedLogger, "WebRTC camera {} Ignoring non-video track.", m_szStreamerID);

415 return;

416 }

417

418

419 m_pVideoTrack1 = rtcTrack;

420

421

422 m_pTrack1H264DepacketizationHandler = std::make_shared<rtc::H264RtpDepacketizer>(rtc::NalUnit::Separator::LongStartSequence);

423 m_pTrack1RtcpReceivingSession = std::make_shared<rtc::RtcpReceivingSession>();

424 m_pTrack1H264DepacketizationHandler->addToChain(m_pTrack1RtcpReceivingSession);

425 m_pVideoTrack1->setMediaHandler(m_pTrack1H264DepacketizationHandler);

426

427 LOG_INFO(logging::g_qSharedLogger, "WebRTC camera {} Video Track Handler Configured. Waiting for frames...", m_szStreamerID);

428

429

430 m_pVideoTrack1->onFrame(

431 [this](rtc::binary rtcBinaryMessage, rtc::FrameInfo rtcFrameInfo)

432 {

433

434 if (rtcBinaryMessage.empty())

435 {

436 return;

437 }

438

439

440 std::vector<uint8_t> vH264EncodedBytes;

441

442 vH264EncodedBytes.reserve(rtcBinaryMessage.size() + 16 + AV_INPUT_BUFFER_PADDING_SIZE);

443

444 if (rtcFrameInfo.payloadType == 96)

445 {

446

447

448

449 const uint8_t* pData = reinterpret_cast<const uint8_t*>(rtcBinaryMessage.data());

450 vH264EncodedBytes.insert(vH264EncodedBytes.end(), pData, pData + rtcBinaryMessage.size());

451 }

452 else if (rtcFrameInfo.payloadType == 97)

453 {

454

455

456

457

458

459 if (rtcBinaryMessage.size() <= 2)

460 return;

461

462

463 vH264EncodedBytes.push_back(0);

464 vH264EncodedBytes.push_back(0);

465 vH264EncodedBytes.push_back(0);

466 vH264EncodedBytes.push_back(1);

467

468

469 const uint8_t* pData = reinterpret_cast<const uint8_t*>(rtcBinaryMessage.data());

470 vH264EncodedBytes.insert(vH264EncodedBytes.end(), pData + 2, pData + rtcBinaryMessage.size());

471 }

472 else

473 {

474

475 return;

476 }

477

478

479 vH264EncodedBytes.insert(vH264EncodedBytes.end(), AV_INPUT_BUFFER_PADDING_SIZE, 0);

480

481

482 std::unique_lock<std::shared_mutex> lkDecoderLock(m_muDecoderMutex);

484

485 if (bDecoded && m_fnOnFrameReceivedCallback)

486 {

487 m_fnOnFrameReceivedCallback(m_cvFrame);

488 }

489 lkDecoderLock.unlock();

490 });

491 });

492

493 m_pPeerConnection->onGatheringStateChange(

494 [this](rtc::PeerConnection::GatheringState eGatheringState)

495 {

496

497 switch (eGatheringState)

498 {

499 case rtc::PeerConnection::GatheringState::Complete:

500 LOG_DEBUG(logging::g_qSharedLogger, "Camera {} PeerConnection ICE gathering state changed to: Complete", m_szStreamerID);

501 break;

502 case rtc::PeerConnection::GatheringState::InProgress:

503 LOG_DEBUG(logging::g_qSharedLogger, "Camera {} PeerConnection ICE gathering state changed to: InProgress", m_szStreamerID);

504 break;

505 case rtc::PeerConnection::GatheringState::New:

506 LOG_DEBUG(logging::g_qSharedLogger, "Camera {} PeerConnection ICE gathering state changed to: New", m_szStreamerID);

507 break;

508 default: LOG_DEBUG(logging::g_qSharedLogger, "Camera {} Peer connection ICE gathering state changed to: Unknown", m_szStreamerID); break;

509 }

510 });

511

512 m_pPeerConnection->onIceStateChange(

513 [this](rtc::PeerConnection::IceState eIceState)

514 {

515

516 switch (eIceState)

517 {

518 case rtc::PeerConnection::IceState::Checking:

519 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: Checking", m_szStreamerID);

520 break;

521 case rtc::PeerConnection::IceState::Closed:

522 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: Closed", m_szStreamerID);

523 break;

524 case rtc::PeerConnection::IceState::Completed:

525 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: Completed", m_szStreamerID);

526 break;

527 case rtc::PeerConnection::IceState::Connected:

528 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: Connected", m_szStreamerID);

529 break;

530 case rtc::PeerConnection::IceState::Disconnected:

531 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: Disconnected", m_szStreamerID);

532 break;

533 case rtc::PeerConnection::IceState::Failed:

534 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: Failed", m_szStreamerID);

535 break;

536 case rtc::PeerConnection::IceState::New: LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection ICE state changed to: New", m_szStreamerID); break;

537 default: LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection ICE state changed to: Unknown", m_szStreamerID); break;

538 }

539 });

540 m_pPeerConnection->onSignalingStateChange(

541 [this](rtc::PeerConnection::SignalingState eSignalingState)

542 {

543

544 switch (eSignalingState)

545 {

546 case rtc::PeerConnection::SignalingState::HaveLocalOffer:

547 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection signaling state changed to: HaveLocalOffer", m_szStreamerID);

548 break;

549 case rtc::PeerConnection::SignalingState::HaveLocalPranswer:

550 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection signaling state changed to: HaveLocalPranswer", m_szStreamerID);

551 break;

552 case rtc::PeerConnection::SignalingState::HaveRemoteOffer:

553 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection signaling state changed to: HaveRemoteOffer", m_szStreamerID);

554 break;

555 case rtc::PeerConnection::SignalingState::HaveRemotePranswer:

556 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection signaling state changed to: HaveRemotePranswer", m_szStreamerID);

557 break;

558 case rtc::PeerConnection::SignalingState::Stable:

559 LOG_INFO(logging::g_qSharedLogger, "Camera {} PeerConnection signaling state changed to: Stable", m_szStreamerID);

560 break;

561 default: LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection signaling state changed to: Unknown", m_szStreamerID); break;

562 }

563 });

564

565 m_pPeerConnection->onStateChange(

566 [this](rtc::PeerConnection::State eState)

567 {

568

569 switch (eState)

570 {

571 case rtc::PeerConnection::State::Closed: LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: Closed", m_szStreamerID); break;

572 case rtc::PeerConnection::State::Connected:

573 LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: Connected", m_szStreamerID);

574 break;

575 case rtc::PeerConnection::State::Connecting:

576 LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: Connecting", m_szStreamerID);

577 break;

578 case rtc::PeerConnection::State::Disconnected:

579 LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: Disconnected", m_szStreamerID);

580 break;

581 case rtc::PeerConnection::State::Failed: LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: Failed", m_szStreamerID); break;

582 case rtc::PeerConnection::State::New: LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: New", m_szStreamerID); break;

583 default: LOG_INFO(logging::g_qSharedLogger, "Camera {} Peer connection state changed to: Unknown", m_szStreamerID); break;

584 }

585 });

586

588

590

591 m_pDataChannel->onOpen(

592 [this]()

593 {

594

595 LOG_INFO(logging::g_qSharedLogger, "Camera {} WebRTC Data channel OPENED.", m_szStreamerID);

596

597

598 m_pDataChannel->send(std::string(1, static_cast<char>(1)));

599

600

601

602

603 LOG_INFO(logging::g_qSharedLogger, "Camera {} WebRTC Sending Encoder Configuration to Simulator...", m_szStreamerID);

604

605

607

608

611

612

615

616

618 });

619

620 m_pDataChannel->onMessage(

621 [this](std::variant<rtc::binary, rtc::string> rtcMessage)

622 {

623 try

624 {

625

626 nlohmann::json jsnMessage;

627

628

629 if (std::holds_alternative<rtc::string>(rtcMessage))

630 {

631

632 std::string szMessage = std::get<rtc::string>(rtcMessage);

633

634

635 jsnMessage = nlohmann::json::parse(szMessage);

636 LOG_DEBUG(logging::g_qSharedLogger, "Camera {} DATA_CHANNEL Received message from peer: {}", m_szStreamerID, szMessage);

637 }

638 else if (std::holds_alternative<rtc::binary>(rtcMessage))

639 {

640

641 rtc::binary rtcBinaryData = std::get<rtc::binary>(rtcMessage);

642

643

644 std::string szBinaryDataStr;

645 for (auto byte : rtcBinaryData)

646 {

647 if (std::isprint(static_cast<unsigned char>(byte)))

648 {

649 szBinaryDataStr += static_cast<char>(byte);

650 }

651 }

652

653

654 LOG_DEBUG(logging::g_qSharedLogger,

655 "Camera {} DATA_CHANNEL Received binary data ({} bytes): {}",

656 m_szStreamerID,

657 rtcBinaryData.size(),

658 szBinaryDataStr);

659 }

660 else

661 {

662 LOG_ERROR(logging::g_qSharedLogger, "Camera {} Received unknown message type from peer", m_szStreamerID);

663 }

664 }

665 catch (const std::exception& e)

666 {

667

668 LOG_ERROR(logging::g_qSharedLogger, "Camera {} Error occurred while negotiating with the datachannel: {}", m_szStreamerID, e.what());

669 }

670 });

671

672 return true;

673}
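The SDP munging step in `onLocalDescription` can be tried in isolation. The following is a minimal sketch using only the standard library; the function name `MungeSdpBitrate` is hypothetical and not part of the listed source, while the pattern and replacement string are copied from the listing.

```cpp
#include <cassert>
#include <regex>
#include <string>

// Hypothetical helper (not in the original source): appends the bitrate hints
// to each fmtp line that matches the pattern used in the listing.
std::string MungeSdpBitrate(const std::string& szSDP)
{
    // Same pattern as the listing: capture the fmtp line up to its CRLF.
    static const std::regex reFmtp("(a=fmtp:\\d+ level-asymmetry-allowed=.*)\r\n");

    std::string szMunged = std::regex_replace(
        szSDP, reFmtp, "$1;x-google-start-bitrate=10000;x-google-max-bitrate=100000\r\n");

    // The replacement only appends text, so the result never shrinks.
    assert(szMunged.size() >= szSDP.size());
    return szMunged;
}
```

SDP lines that do not match the pattern pass through unchanged, so an SDP without a `level-asymmetry-allowed` fmtp attribute is sent as generated.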

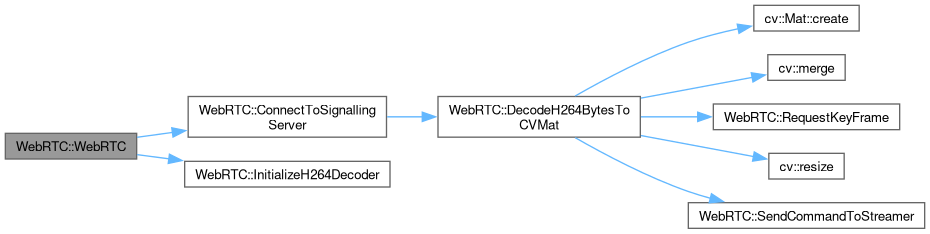

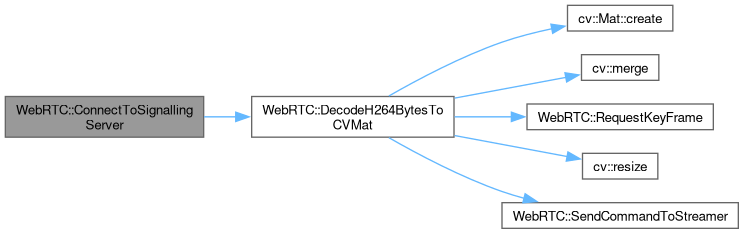

bool SendCommandToStreamer(const std::string &szCommand)

This method sends a command to the streamer via the data channel. The command is a JSON string that i...

Definition WebRTC.cpp:898

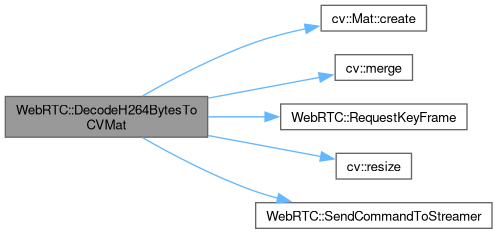

bool DecodeH264BytesToCVMat(const std::vector< uint8_t > &vH264EncodedBytes, cv::Mat &cvDecodedFrame, const AVPixelFormat eOutputPixelFormat)

Decodes H264 encoded bytes to a cv::Mat using FFmpeg.

Definition WebRTC.cpp:746
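The byte buffer passed to the decoder is assembled in the `onFrame` callback of the listing above. The following is a rough, standalone sketch of that assembly, assuming C++17. `BuildDecoderInput` and `kPaddingSize` are hypothetical names: the constant stands in for FFmpeg's `AV_INPUT_BUFFER_PADDING_SIZE` so the sketch builds without FFmpeg, and `std::vector<std::byte>` stands in for `rtc::binary`.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <vector>

// Stand-in for AV_INPUT_BUFFER_PADDING_SIZE (assumption for this sketch).
constexpr std::size_t kPaddingSize = 64;

// Hypothetical helper mirroring the onFrame logic: returns an empty vector
// when the frame is skipped, otherwise the zero-padded decoder input.
std::vector<uint8_t> BuildDecoderInput(const std::vector<std::byte>& vFrame, int nPayloadType)
{
    std::vector<uint8_t> vOut;
    if (vFrame.empty())
    {
        return vOut;
    }

    vOut.reserve(vFrame.size() + 16 + kPaddingSize);
    const uint8_t* pData = reinterpret_cast<const uint8_t*>(vFrame.data());

    if (nPayloadType == 96)
    {
        // Payload type 96: copy the frame bytes unchanged.
        vOut.insert(vOut.end(), pData, pData + vFrame.size());
    }
    else if (nPayloadType == 97)
    {
        // Payload type 97: drop the first two bytes and prepend a 4-byte start code.
        if (vFrame.size() <= 2)
        {
            return vOut;
        }
        vOut.insert(vOut.end(), {0, 0, 0, 1});
        vOut.insert(vOut.end(), pData + 2, pData + vFrame.size());
    }
    else
    {
        // Any other payload type is ignored.
        return vOut;
    }

    // Append zeroed padding after the payload, as the listing does.
    const std::size_t nPayloadBytes = vOut.size();
    vOut.insert(vOut.end(), kPaddingSize, 0);
    assert(vOut.size() == nPayloadBytes + kPaddingSize);
    return vOut;
}
```

Returning an empty vector for skipped frames is a choice made for this sketch; the listing simply returns from the callback in those cases.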