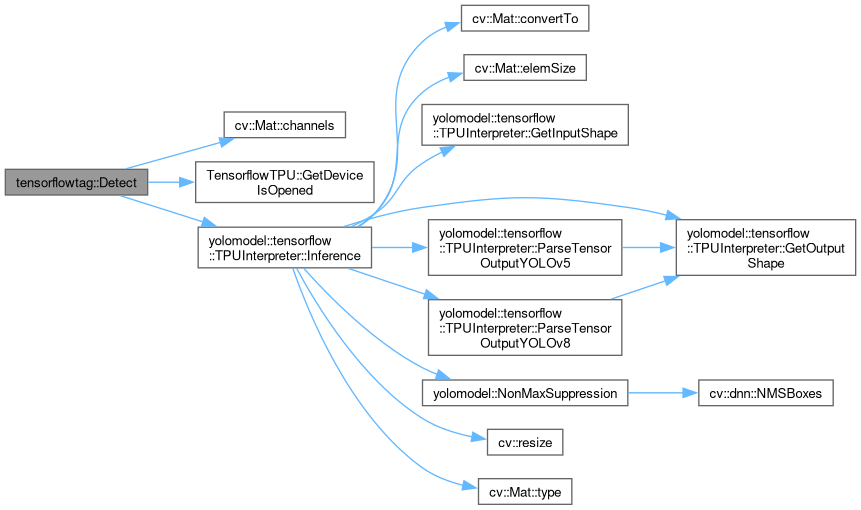

Detect ArUco tags in the provided image using a YOLO DNN model.

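    // The function signature and the condition of the first `if` are missing from this listing.
    {
        // Reject frames that are not RGB.
        if (/* condition missing from this listing */)
        {
            LOG_ERROR(logging::g_qSharedLogger, "Detect() requires a RGB image.");
            return {};
        }

        // Tags found in this frame.
        std::vector<TensorflowTag> vDetectedTags;

        // Only run inference when the device is open. This condition is reconstructed from the
        // GetDeviceIsOpened() reference and the warning message in the else branch.
        if (tfTensorflowDetector.GetDeviceIsOpened())
        {
            // Forward the frame through the YOLO model. One vector of detections is returned per output tensor.
            std::vector<std::vector<yolomodel::Detection>> vOutputTensorTags =
                tfTensorflowDetector.Inference(cvFrame, fMinObjectConfidence, fNMSThreshold);

            // Loop through each output tensor.
            for (std::vector<yolomodel::Detection> vTagDetections : vOutputTensorTags)
            {
                // Loop through each detection. This loop header is reconstructed from the use of stTagDetection below.
                for (yolomodel::Detection stTagDetection : vTagDetections)
                {
                    // Copy the confidence and expand the bounding box into four corner points.
                    TensorflowTag stDetectedTag;
                    stDetectedTag.dConfidence = stTagDetection.fConfidence;
                    stDetectedTag.CornerTL =
                        cv::Point2f(stTagDetection.cvBoundingBox.x, stTagDetection.cvBoundingBox.y);
                    stDetectedTag.CornerTR =
                        cv::Point2f(stTagDetection.cvBoundingBox.x + stTagDetection.cvBoundingBox.width, stTagDetection.cvBoundingBox.y);
                    stDetectedTag.CornerBL =
                        cv::Point2f(stTagDetection.cvBoundingBox.x, stTagDetection.cvBoundingBox.y + stTagDetection.cvBoundingBox.height);
                    stDetectedTag.CornerBR =
                        cv::Point2f(stTagDetection.cvBoundingBox.x + stTagDetection.cvBoundingBox.width,
                                    stTagDetection.cvBoundingBox.y + stTagDetection.cvBoundingBox.height);

                    // Add the tag to the results.
                    vDetectedTags.emplace_back(stDetectedTag);
                }
            }
        }
        else
        {
            // Warn that no detection was attempted.
            LOG_WARNING(logging::g_qSharedLogger,
                        "TensorflowDetect: Unable to detect tags using YOLO tensorflow detection because hardware is not opened or model is not initialized.");
        }

        // Empty if the device was not opened or nothing was detected.
        return vDetectedTags;
    }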
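
The corner assignments in the listing derive four points from an axis-aligned bounding box given as a top-left origin plus a width and height. The sketch below isolates that step so it can be checked on its own. `Point2f`, `Rect`, `TagCorners`, and `CornersFromBox` are hypothetical stand-ins introduced here for illustration (the project uses `cv::Point2f` and `cvBoundingBox`), so the block compiles without OpenCV.

```cpp
#include <cassert>

// Hypothetical stand-ins for cv::Point2f and cv::Rect (assumption: not the project's types).
struct Point2f
{
    float x;
    float y;
};

struct Rect
{
    int x;
    int y;
    int width;
    int height;
};

// Hypothetical container mirroring the CornerTL/TR/BL/BR fields used in the listing.
struct TagCorners
{
    Point2f tl;
    Point2f tr;
    Point2f bl;
    Point2f br;
};

// Expand an axis-aligned box into its four corners.
// Image coordinates are assumed: x grows to the right, y grows downward.
inline TagCorners CornersFromBox(const Rect& box)
{
    // A negative size would swap the corner labels, so treat it as a caller error.
    assert(box.width >= 0 && box.height >= 0);

    const float fLeft   = static_cast<float>(box.x);
    const float fTop    = static_cast<float>(box.y);
    const float fRight  = static_cast<float>(box.x + box.width);
    const float fBottom = static_cast<float>(box.y + box.height);

    return TagCorners{{fLeft, fTop}, {fRight, fTop}, {fLeft, fBottom}, {fRight, fBottom}};
}
```

For a box at (10, 20) with width 30 and height 40, the top-right corner lands at (40, 20) and the bottom-right at (40, 60). Because the box is axis-aligned, these corners describe the detection region and not the tag's actual rotated outline.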

- `bool GetDeviceIsOpened() const`: Accessor for the Device Is Opened private member. Definition: TensorflowTPU.hpp:347
- `std::vector<std::vector<Detection>> Inference(const cv::Mat& cvInputFrame, const float fMinObjectConfidence = 0.85, const float fNMSThreshold = 0.6) override`: Given an input image, forward the image through the YOLO model to run inference on the EdgeTPU,... Definition: YOLOModel.hpp:270
- This struct is used to. Definition: YOLOModel.hpp:42
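
The `Inference` signature takes a minimum object confidence and an NMS threshold, but this page does not show how they are applied. As a rough illustration of what such parameters usually control, here is a minimal sketch of confidence filtering followed by greedy non-maximum suppression based on intersection over union (IoU). It is a generic technique and not necessarily what `Inference` does internally. `Box`, `IoU`, and `FilterDetections` are hypothetical names introduced for this example, and treating the NMS threshold as an IoU cutoff is an assumption.

```cpp
#include <algorithm>
#include <vector>

// Hypothetical detection record (assumption: not the project's Detection struct).
struct Box
{
    float x;
    float y;
    float width;
    float height;
    float confidence;
};

// Intersection over union of two axis-aligned boxes, in the range [0, 1].
inline float IoU(const Box& a, const Box& b)
{
    const float fX1    = std::max(a.x, b.x);
    const float fY1    = std::max(a.y, b.y);
    const float fX2    = std::min(a.x + a.width, b.x + b.width);
    const float fY2    = std::min(a.y + a.height, b.y + b.height);
    const float fInter = std::max(0.0f, fX2 - fX1) * std::max(0.0f, fY2 - fY1);
    const float fUnion = a.width * a.height + b.width * b.height - fInter;
    return fUnion > 0.0f ? fInter / fUnion : 0.0f;
}

// Drop low-confidence boxes, then greedily keep the most confident box in each overlapping group.
inline std::vector<Box> FilterDetections(std::vector<Box> vBoxes, const float fMinConfidence, const float fNMSThreshold)
{
    // Step 1: remove boxes below the confidence threshold.
    vBoxes.erase(std::remove_if(vBoxes.begin(),
                                vBoxes.end(),
                                [fMinConfidence](const Box& b) { return b.confidence < fMinConfidence; }),
                 vBoxes.end());

    // Step 2: sort by confidence, highest first.
    std::sort(vBoxes.begin(), vBoxes.end(), [](const Box& a, const Box& b) { return a.confidence > b.confidence; });

    // Step 3: keep a box only if it does not overlap an already-kept box too strongly.
    std::vector<Box> vKept;
    for (const Box& stCandidate : vBoxes)
    {
        bool bSuppressed = false;
        for (const Box& stKept : vKept)
        {
            if (IoU(stCandidate, stKept) > fNMSThreshold)
            {
                bSuppressed = true;
                break;
            }
        }
        if (!bSuppressed)
        {
            vKept.push_back(stCandidate);
        }
    }
    return vKept;
}
```

With the default values from the signature (0.85 and 0.6), two boxes that overlap with an IoU of roughly 0.68 collapse to the more confident one, while a box with confidence 0.5 is discarded before suppression runs.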