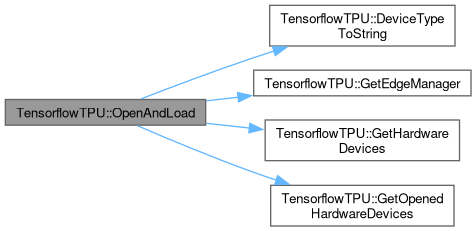

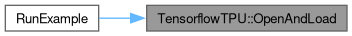

Attempt to open the model at the given path and load it onto the EdgeTPU device.

    {
        // Default return status and the list of candidate devices.
        TfLiteStatus tfReturnStatus = TfLiteStatus::kTfLiteCancelled;
        std::vector<edgetpu::EdgeTpuManager::DeviceEnumerationRecord> vValidDevices;

        // Map the requested device type onto the libedgetpu device type.
        switch (eDeviceType)
        {
            case DeviceType::eAuto: m_tpuDevice.type = edgetpu::DeviceType(-1); break;
            case DeviceType::ePCIe: m_tpuDevice.type = edgetpu::DeviceType::kApexPci; break;
            case DeviceType::eUSB: m_tpuDevice.type = edgetpu::DeviceType::kApexUsb; break;
            default: m_tpuDevice.type = edgetpu::DeviceType(-1); break;
        }

        // Load and verify the model file.
        m_pTFLiteModel = tflite::FlatBufferModel::VerifyAndBuildFromFile(m_szModelPath.c_str());

        if (m_pTFLiteModel != nullptr)
        {
            // Enumerate the available EdgeTPU devices.
            std::vector<edgetpu::EdgeTpuManager::DeviceEnumerationRecord> vDevices = this->GetHardwareDevices();

            // Collect the devices that pass the checks below.
            for (unsigned int unIter = 0; unIter < vDevices.size(); ++unIter)
            {
                bool bValidDevice = true;

                // Compare against every device that is already opened.
                for (unsigned int nJter = 0; nJter < vAlreadyOpenedDevices.size(); ++nJter)
                {
                    // Reject a device whose path matches an opened device.
                    if (vAlreadyOpenedDevices[nJter]->GetDeviceEnumRecord().path == vDevices[unIter].path)
                    {
                        bValidDevice = false;
                    }
                    // Otherwise, if a specific type was requested, reject a type mismatch.
                    else if (eDeviceType != DeviceType::eAuto)
                    {
                        if (vDevices[unIter].type != m_tpuDevice.type)
                        {
                            bValidDevice = false;
                        }
                    }
                }

                if (bValidDevice)
                {
                    vValidDevices.emplace_back(vDevices[unIter]);
                }
            }

            if (vValidDevices.size() > 0)
            {
                // Try each valid device in turn until one opens successfully.
                for (unsigned int unIter = 0; unIter < vValidDevices.size() && !m_bDeviceOpened; ++unIter)
                {
                    LOG_INFO(logging::g_qSharedLogger,
                             "Attempting to load {} onto {} device at {} ({})...",
                             m_szModelPath,
                             /* ... */
                             vValidDevices[unIter].path,
                             /* ... */);

                    // Open the device and obtain its context.
                    m_pEdgeTPUContext = this->GetEdgeManager()->OpenDevice(vValidDevices[unIter].type, vValidDevices[unIter].path, m_tpuDeviceOptions);

                    if (m_pEdgeTPUContext != nullptr && m_pEdgeTPUContext->IsReady())
                    {
                        // Register the EdgeTPU custom op and build the interpreter.
                        tflite::ops::builtin::BuiltinOpResolverWithXNNPACK tfResolver;
                        tfResolver.AddCustom(edgetpu::kCustomOp, edgetpu::RegisterCustomOp());

                        if (tflite::InterpreterBuilder(*m_pTFLiteModel, tfResolver)(&m_pInterpreter) != kTfLiteOk)
                        {
                            LOG_ERROR(logging::g_qSharedLogger,
                                      "Unable to build interpreter for model {} with device {} ({})",
                                      m_szModelPath,
                                      vValidDevices[unIter].path,
                                      /* ... */);

                            // Release the interpreter and the device context.
                            m_pInterpreter.reset();
                            m_pEdgeTPUContext.reset();

                            tfReturnStatus = TfLiteStatus::kTfLiteUnresolvedOps;
                        }
                        else
                        {
                            // Bind the device context to the interpreter, then allocate tensors.
                            m_pInterpreter->SetExternalContext(kTfLiteEdgeTpuContext, m_pEdgeTPUContext.get());

                            if (m_pInterpreter->AllocateTensors() != kTfLiteOk)
                            {
                                LOG_WARNING(logging::g_qSharedLogger,
                                            "Even though device was opened and interpreter was built, allocation of tensors failed for model {} with device {} ({})",
                                            m_szModelPath,
                                            vValidDevices[unIter].path,
                                            /* ... */);

                                // Release the interpreter and the device context.
                                m_pInterpreter.reset();
                                m_pEdgeTPUContext.reset();

                                tfReturnStatus = TfLiteStatus::kTfLiteDelegateDataWriteError;
                            }
                            else
                            {
                                LOG_INFO(logging::g_qSharedLogger,
                                         "Successfully opened and loaded model {} with device {} ({})",
                                         m_szModelPath,
                                         vValidDevices[unIter].path,
                                         /* ... */);

                                // Setting this flag ends the device loop.
                                m_bDeviceOpened = true;

                                tfReturnStatus = TfLiteStatus::kTfLiteOk;
                            }
                        }
                    }
                    else
                    {
                        LOG_ERROR(logging::g_qSharedLogger,
                                  "Unable to open device {} ({}) for model {}.",
                                  vValidDevices[unIter].path,
                                  /* ... */
                                  m_szModelPath);
                    }
                }
            }
            else
            {
                LOG_ERROR(logging::g_qSharedLogger,
                          "No valid devices were found for model {}. Device type is {}",
                          m_szModelPath,
                          /* ... */);
            }
        }
        else
        {
            LOG_ERROR(logging::g_qSharedLogger, "Unable to load model {}. Does it exist at this path? Is this actually compiled for the EdgeTPU?", m_szModelPath);
        }

        return tfReturnStatus;
    }
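The device-filtering step in the listing can be sketched in isolation. As extracted, the listing compares device types in an `else if` branch inside the loop over `vAlreadyOpenedDevices`, so that comparison only runs when at least one device is already open. The sketch below is one possible alternative that applies the two checks independently; it is not the project's implementation. `TpuType`, `DeviceRecord`, `FilterDevices`, and the `bAnyType` flag are hypothetical stand-ins, introduced so the example compiles without libedgetpu.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical stand-ins for edgetpu::DeviceType and
// edgetpu::EdgeTpuManager::DeviceEnumerationRecord (assumptions, not the real types).
enum class TpuType { kApexPci, kApexUsb };

struct DeviceRecord
{
    TpuType type;
    std::string path;
};

// Returns the devices that are not already opened and that, unless bAnyType
// is set, match eWantedType. The two checks do not depend on each other.
std::vector<DeviceRecord> FilterDevices(const std::vector<DeviceRecord>& vDevices,
                                        const std::vector<std::string>& vOpenedPaths,
                                        bool bAnyType,
                                        TpuType eWantedType)
{
    std::vector<DeviceRecord> vValid;

    for (const DeviceRecord& stDevice : vDevices)
    {
        // A device is unavailable if its path appears in the opened list.
        const bool bAlreadyOpened =
            std::find(vOpenedPaths.begin(), vOpenedPaths.end(), stDevice.path) != vOpenedPaths.end();

        // The type check runs even when no device has been opened yet.
        const bool bTypeMatches = bAnyType || stDevice.type == eWantedType;

        if (!bAlreadyOpened && bTypeMatches)
        {
            vValid.push_back(stDevice);
        }
    }

    return vValid;
}
```

With an empty opened list and `bAnyType` set to `false`, this version still returns only devices of the requested type.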

`edgetpu::EdgeTpuManager* GetEdgeManager()`

Retrieves a pointer to an `EdgeTpuManager` instance from the libedgetpu library.

Definition TensorflowTPU.hpp:420

`static std::vector<edgetpu::EdgeTpuManager::DeviceEnumerationRecord> GetHardwareDevices()`

Retrieves a list of EdgeTPU devices from the edge API.

Definition TensorflowTPU.hpp:361

`static std::vector<std::shared_ptr<edgetpu::EdgeTpuContext>> GetOpenedHardwareDevices()`

Retrieves a list of already opened EdgeTPU devices from the edge API.

Definition TensorflowTPU.hpp:388

`std::string DeviceTypeToString(edgetpu::DeviceType eDeviceType)`

Converts a device type to a readable string (a to_string-style method).

Definition TensorflowTPU.hpp:445
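The body of `DeviceTypeToString` is not shown here, so the following is only a sketch of how such a conversion might look. `TpuDeviceType` is a hypothetical stand-in for `edgetpu::DeviceType`, the function name `DeviceTypeToStringSketch` is invented for illustration, and the returned strings are assumptions. The `default` branch covers out-of-range values such as the `-1` that the listing assigns for automatic selection.

```cpp
#include <string>

// Hypothetical stand-in for edgetpu::DeviceType, so the sketch compiles
// without libedgetpu.
enum class TpuDeviceType { kApexPci = 0, kApexUsb = 1 };

// Converts a device type to a readable string. The strings are illustrative
// assumptions, not the values returned by the project's method.
std::string DeviceTypeToStringSketch(TpuDeviceType eDeviceType)
{
    switch (eDeviceType)
    {
        case TpuDeviceType::kApexPci: return "PCIe";
        case TpuDeviceType::kApexUsb: return "USB";
        default: return "Unknown";    // Any value outside the enumerators, e.g. -1.
    }
}
```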